What Is Edge AI and How Does It Work?

- What is edge AI?

Edge AI, short for Edge Artificial Intelligence, is a transformative approach to artificial intelligence that brings the power of AI algorithms directly to the devices where data is generated or collected, such as smartphones, cameras, sensors, or IoT devices. Unlike traditional AI systems that rely on centralized servers or cloud computing for data processing, Edge AI performs computation locally on the device itself, at the "edge" of the network.

Edge AI offers several advantages:

- Reduced Latency: By processing data locally, Edge AI significantly reduces the time it takes to analyze and respond to information. This is particularly important in applications where real-time decision-making is critical, such as autonomous vehicles, industrial automation, or healthcare monitoring systems.

- Improved Privacy and Security: Since data is processed locally on the device, Edge AI minimizes the need to transmit sensitive information to remote servers, thereby enhancing privacy and security. This is especially beneficial in scenarios where data confidentiality is paramount, such as personal health monitoring or surveillance systems.

- Optimized Bandwidth Usage: Edge AI eases the strain on network bandwidth by performing computation locally instead of continuously transmitting large amounts of data to centralized servers for processing. This is advantageous in environments with limited or unreliable network connectivity, such as remote areas or IoT deployments.

- Enhanced Reliability: Edge AI enables devices to operate autonomously, even in offline or low-connectivity environments, ensuring continuous functionality and reliability. This is particularly useful in applications where internet connectivity may be intermittent or unavailable, such as in remote industrial sites or onboard autonomous vehicles.

The typical workflow of Edge AI:

- Data Acquisition: Edge devices collect data from their surroundings using sensors, cameras, or other input sources.

- On-Device Processing: The collected data undergoes preprocessing and analysis directly on the device using AI algorithms, without the need for external servers.

- Inference and Decision-Making: The AI model deployed on the device generates insights, predictions, or classifications based on the processed data.

- Action: The device may take appropriate actions based on the output of the AI model, such as sending alerts, adjusting settings, or initiating control actions.
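The four workflow steps above can be sketched as a small loop. This is only an illustration under stated assumptions: the function names, the temperature readings, the window size, and the 75-degree threshold are all hypothetical, and a simple threshold rule stands in for a real deployed model.

```python
from statistics import mean


def acquire(sensor_readings):
    """Data acquisition: yield raw readings (a list stands in for a real sensor)."""
    yield from sensor_readings


def preprocess(window):
    """On-device processing: smooth the most recent readings with a simple mean."""
    return mean(window)


def infer(smoothed, threshold=75.0):
    """Inference: a threshold rule standing in for a deployed AI model (assumption)."""
    return "overheat" if smoothed > threshold else "normal"


def act(label):
    """Action: return an alert message, or None when no action is needed."""
    return "ALERT: temperature too high" if label == "overheat" else None


def run_pipeline(sensor_readings, window_size=3):
    """Run acquisition, processing, inference, and action for every reading."""
    window, actions = [], []
    for reading in acquire(sensor_readings):
        window.append(reading)
        window = window[-window_size:]  # keep only the latest readings
        actions.append(act(infer(preprocess(window))))
    return actions


actions = run_pipeline([70, 72, 74, 90, 95])
```

Every step runs in the same process on the device, so no reading leaves it unless the action step decides to send an alert.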

Overall, Edge AI represents a drastic shift in the deployment of AI technology, enabling smarter, more responsive devices with improved efficiency, privacy, and reliability. By harnessing the computational power of edge devices, Edge AI unlocks a wide range of applications across industries, from smart homes and cities to healthcare and transportation.


- How is this different from traditional AI?

Edge AI and traditional AI differ primarily in where and how they process data. Traditional AI relies on centralized servers or cloud computing for data processing, while Edge AI performs computation directly on edge devices, such as smartphones, sensors, or IoT devices, at the "edge" of the network where data is generated.

Because data does not need to travel to remote servers for processing, this decentralized approach significantly reduces latency, enabling faster responses to events and real-time decision-making. Edge AI can also keep operating in offline or low-connectivity environments, ensuring continuous functionality and reliability, whereas traditional cloud-based AI systems depend on a connection to external servers.

Furthermore, Edge AI enhances privacy and security by keeping data local, minimizing the need to transmit sensitive information over networks. By processing data locally, Edge AI also reduces bandwidth usage, as only relevant insights or summaries are sent to the cloud, alleviating strain on network infrastructure.
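To make the bandwidth point concrete, here is a rough back-of-the-envelope calculation. All figures are hypothetical assumptions chosen for illustration (a camera producing 50 kB frames at 10 frames per second, versus a 200-byte summary once per second); real savings depend entirely on the workload.

```python
SECONDS_PER_DAY = 86_400


def bytes_per_day(payload_bytes, messages_per_second):
    """Total bytes sent in 24 hours at a steady message rate."""
    return payload_bytes * messages_per_second * SECONDS_PER_DAY


# Hypothetical camera streaming every frame to the cloud for analysis.
raw_stream = bytes_per_day(payload_bytes=50_000, messages_per_second=10)

# Same camera analyzing frames locally and sending only a small summary.
edge_summaries = bytes_per_day(payload_bytes=200, messages_per_second=1)

reduction_factor = raw_stream / edge_summaries

print(f"raw stream:     {raw_stream / 1e9:.1f} GB/day")
print(f"edge summaries: {edge_summaries / 1e6:.2f} MB/day")
print(f"reduction:      {reduction_factor:.0f}x")
```

Under these assumed numbers the raw stream comes to 43.2 GB per day and the summaries to 17.28 MB per day, a 2500-fold reduction.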

Overall, Edge AI offers advantages in terms of reduced latency, improved privacy and security, enhanced reliability, and scalability compared to traditional AI systems, making it well-suited for applications where real-time decision-making, autonomy, and privacy are critical considerations.

- What are the benefits of edge AI?

Edge AI offers several benefits that make it a compelling approach for various applications:

Firstly, it reduces latency by processing data directly on edge devices, eliminating the need to send data to remote servers for analysis. This enables faster response times and real-time decision-making, crucial for applications like autonomous vehicles, industrial automation, and healthcare monitoring systems.

Secondly, Edge AI enhances privacy and security by keeping sensitive data local, minimizing the risk of unauthorized access or data breaches associated with transmitting data over networks to centralized servers. This is particularly important in scenarios where data confidentiality is paramount, such as personal health monitoring or surveillance systems.

Additionally, Edge AI improves reliability by enabling devices to operate autonomously, even in offline or low-connectivity environments, ensuring continuous functionality without reliance on internet connectivity. This reliability is essential for applications in remote areas, industrial settings, or onboard autonomous vehicles.

Furthermore, Edge AI reduces bandwidth usage by processing data locally and sending only relevant insights or summaries to the cloud, alleviating strain on network infrastructure and minimizing costs associated with data transmission.

Moreover, Edge AI enhances scalability by enabling distributed processing across multiple edge devices, allowing for efficient deployment of AI algorithms at scale. This scalability is crucial for applications that require processing large volumes of data across a distributed network of devices, such as smart cities, agricultural monitoring systems, or retail analytics.

Overall, the benefits of Edge AI, including reduced latency, improved privacy and security, enhanced reliability, reduced bandwidth usage, and scalability, make it a powerful and versatile approach for deploying AI algorithms in various edge computing scenarios, unlocking new possibilities for innovation and efficiency across industries.

- The stages of edge AI

The deployment of artificial intelligence (AI) at the edge involves several stages, each contributing to the efficient processing and analysis of data directly on edge devices. These stages enable edge devices to perform tasks such as data preprocessing, inference, and decision-making without relying on centralized servers or cloud computing. The stages are as follows:

- Data Acquisition: The first stage involves collecting data from the surrounding environment using sensors, cameras, microphones, or other input sources. This data can include images, videos, audio recordings, sensor readings, or any other relevant information.

- Data Preprocessing: Once the data is collected, it undergoes preprocessing to clean, normalize, or transform it into a suitable format for analysis. This preprocessing may involve tasks such as noise reduction, image enhancement, or feature extraction, depending on the specific application.

- On-Device Inference: After preprocessing, the data is fed into the AI model for inference, where the model analyzes the data and generates predictions, classifications, or other insights. Unlike traditional AI systems, where inference typically occurs on powerful servers in the cloud, edge AI performs inference directly on the device, often leveraging specialized hardware accelerators such as GPUs or dedicated AI chips.

- Decision-Making: Based on the output of the AI model, the edge device may make decisions or take actions in real-time. These decisions can range from simple responses, such as sending alerts or adjusting settings, to more complex actions, such as controlling autonomous vehicles or optimizing industrial processes.

- Feedback Loop: In some cases, edge AI systems incorporate a feedback loop where the output of the AI model influences subsequent data acquisition or processing. For example, in a smart surveillance system, suspicious activities detected by the AI model may trigger additional data collection or alert notifications.

- Optimization and Adaptation: Edge AI models are typically optimized and adapted to make efficient use of the limited computational resources on the device. This optimization may involve techniques such as model quantization, pruning, or compression, which reduce the size and complexity of the model without significantly sacrificing accuracy.

- Local Execution: Edge AI local execution means processing data directly on edge devices like smartphones or IoT devices, without relying on external servers or cloud computing. This approach reduces latency, enabling faster responses and real-time decision-making. It enhances privacy and security by keeping sensitive data local, minimizing the risk of unauthorized access. Moreover, local execution ensures continuous functionality even in offline or low-connectivity environments, improving reliability. Overall, edge AI local execution enables smarter, more autonomous devices capable of performing intelligent tasks independently, making it ideal for applications where real-time processing, privacy, and reliability are essential.

- Contextualization: Edge AI contextualization involves analyzing data within the specific context of its environment or situation on edge devices. This means considering factors like location, time, and surrounding conditions to make more informed decisions. For example, a smart thermostat using edge AI contextualization may adjust temperature settings based on the time of day, occupancy, and outside weather conditions. By understanding the context in which data is collected, edge AI can provide more accurate and relevant insights, improving the effectiveness of applications such as smart homes, healthcare monitoring, and industrial automation.

- AI to AI communication: Edge AI to AI communication is when artificial intelligence algorithms on edge devices exchange information. This allows them to collaborate and work together to solve complex tasks efficiently. For instance, in a smart home, AI algorithms in different devices like thermostats, cameras, and appliances can communicate to optimize energy usage and enhance security. By sharing insights and coordinating actions locally, edge AI devices can adapt to changing conditions and improve their performance without relying on external servers or cloud services. This decentralized communication enables smarter, more autonomous edge computing systems capable of responding intelligently to their environment.

- AI-adapted choreography: Edge AI-adapted choreography involves using artificial intelligence algorithms on edge devices to create and adjust dance routines. These algorithms analyze music, movements, and environmental factors in real-time to synchronize dancers and adapt choreography dynamically. For example, in a performance, edge AI can adjust the tempo or style of the dance based on the audience's reactions or the dancers' movements. By leveraging local processing and communication between devices, Edge AI-adapted choreography enhances creativity, improvisation, and synchronization in dance performances, leading to more engaging and immersive experiences for both performers and audiences.

Overall, the different stages of AI at the edge enable edge devices to perform intelligent tasks and make decisions locally, without relying on external servers or cloud computing. This decentralized approach offers several advantages, including reduced latency, improved privacy and security, enhanced reliability, and scalability, making it well-suited for a wide range of applications across industries.
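As an illustration of the model quantization mentioned under Optimization and Adaptation, the sketch below maps floating-point weights to 8-bit integers using a single shared scale (symmetric quantization). It is a minimal, framework-free example under assumed values; the weights are made up, and production toolchains use more elaborate schemes.

```python
def quantize(weights, num_bits=8):
    """Map floats to signed integers that share one scale factor."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [v * scale for v in quantized]


weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical model weights
quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)

# The worst-case rounding error is about half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a small rounding error bounded by roughly `scale / 2`.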

- Edge AI vs. distributed AI

Here's a comparison between Edge AI and distributed AI:

Edge AI:

- Processing occurs directly on edge devices like smartphones, sensors, or IoT devices.

- Reduces latency by eliminating the need to send data to centralized servers.

- Enhances privacy and security by keeping data local.

- Operates autonomously, even in offline or low-connectivity environments.

- Enables real-time decision-making and faster response times.

Distributed AI:

- Processing is distributed across multiple devices or servers, often connected via a network.

- Allows for parallel processing and scalability across a distributed network.

- May require significant network bandwidth for communication between nodes.

- Suitable for tasks requiring large-scale computation or collaboration between multiple AI models.

- Can involve coordination and synchronization between distributed nodes for optimal performance.

- Edge AI vs. cloud AI

Edge AI processes data directly on edge devices, like smartphones or sensors, reducing latency and enabling real-time decision-making without constant internet connectivity. Cloud AI, on the other hand, relies on centralized servers for data processing, requiring data transmission over the internet, which can lead to delays. Edge AI enhances privacy and security by keeping sensitive data local, while cloud AI may raise concerns about data privacy and security due to reliance on external servers. Edge AI is suitable for applications requiring quick responses and autonomy, while cloud AI is preferable for tasks needing vast computational resources and data analysis.
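The trade-off described above can be expressed as a simple placement rule. This is a hypothetical sketch, not a standard algorithm: the function name, its parameters, and the latency figures are assumptions, and the rule only compares estimated response time against a deadline.

```python
def choose_target(deadline_ms, device_inference_ms, round_trip_ms,
                  server_inference_ms, online=True):
    """Return "edge", "cloud", or "none" for a single inference request."""
    edge_total = device_inference_ms                    # no network hop needed
    cloud_total = round_trip_ms + server_inference_ms   # network plus compute

    if edge_total <= deadline_ms:
        return "edge"   # local processing is fast enough
    if online and cloud_total <= deadline_ms:
        return "cloud"  # heavy model, but the deadline still allows the trip
    return "none"       # neither option can meet the deadline


# Small model with a tight deadline: stays on the device.
fast_case = choose_target(deadline_ms=50, device_inference_ms=30,
                          round_trip_ms=80, server_inference_ms=5)

# Large model with a relaxed deadline: offloaded to the cloud.
heavy_case = choose_target(deadline_ms=2000, device_inference_ms=5000,
                           round_trip_ms=80, server_inference_ms=300)
```

With these assumed numbers, `fast_case` is `"edge"` and `heavy_case` is `"cloud"`. The same heavy request with `online=False` returns `"none"`, which shows why workloads that must run offline need a model small enough for the device.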

- Key differences between edge AI and cloud AI

Here are the key differences between Edge AI and Cloud AI:

Edge AI:

- Processing occurs directly on edge devices like smartphones, sensors, or IoT devices.

- Reduces latency by eliminating the need to send data to centralized servers.

- Enhances privacy and security by keeping data local.

- Operates autonomously, even in offline or low-connectivity environments.

- Suitable for applications requiring real-time decision-making and autonomy.

Cloud AI:

- Processing occurs on centralized servers in remote data centers.

- Relies on internet connectivity for data transmission and processing.

- May raise concerns about data privacy and security due to reliance on external servers.

- Enables scalability and access to vast computational resources.

- Suitable for applications requiring large-scale data analysis, machine learning training, and resource-intensive tasks.

Benefits of edge AI for end users

- Reduced latency

- Real-time analytics

- Data privacy

- Scalability

- Reduced costs

Edge AI use cases by industry

- Healthcare

- Manufacturing

- Retail

- Smart homes

- Security and surveillance

- Edge AI products etc.

For more information, visit www.proxpc.com.

Edge Computing Products

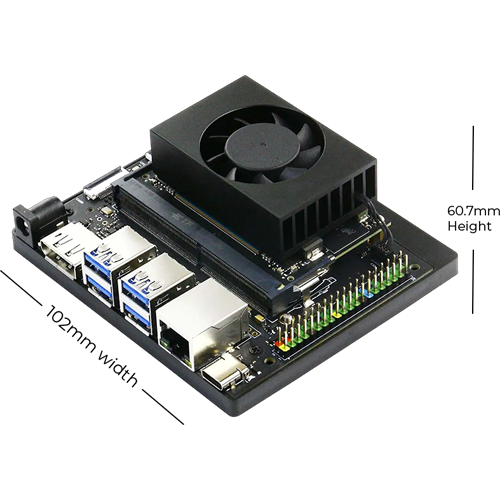

ProX Micro Edge Orin Developer Kit


ProX Micro Edge Orin NX


ProX Micro Edge Orin Nano


ProX Micro Edge AGX Orin
