In recent years, the development of AI-specific processors has accelerated sharply. These processors are designed to handle the computations behind artificial intelligence workloads efficiently. Two prominent types are TPUs (Tensor Processing Units) and NPUs (Neural Processing Units). This article covers the evolution, functionality, and significance of both in the rapidly advancing field of artificial intelligence.

Introduction:

Artificial intelligence (AI) has become an integral part of many applications, from virtual assistants to autonomous vehicles. The processing power required for AI tasks is immense. It often exceeds what general-purpose central processing units (CPUs) can deliver efficiently, and it can strain even graphics processing units (GPUs). To address this challenge, AI-specific processors have emerged, tailored to the computations that dominate AI algorithms.

Evolution of AI-specific Processors:

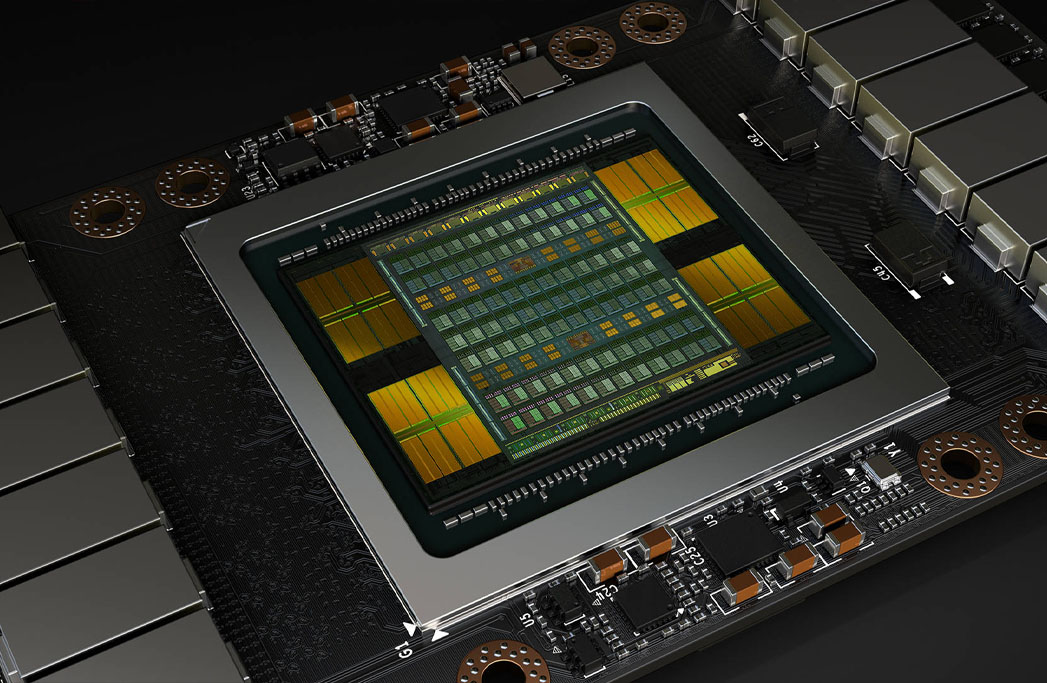

The journey of AI-specific processors began with the adoption of GPUs, which were originally built for graphics but whose parallel processing capabilities delivered much better performance than CPUs on many AI tasks. As AI algorithms evolved, however, a need arose for processors optimized specifically for tensor operations, which are fundamental to many AI models.

Tensor Processing Units (TPUs):

Tensor Processing Units, or TPUs, are a type of AI-specific processor developed by Google. They are built to accelerate machine learning workloads, particularly those based on deep neural networks. Because TPUs are highly optimized for tensor operations, they can be faster and more energy-efficient than general-purpose CPUs, and often than GPUs, on the workloads they target.

Functionality of TPUs:

TPUs are designed to efficiently perform matrix multiplication, which lies at the heart of many neural network computations. Their architecture is optimized for processing large batches of data in parallel, enabling rapid training and inference in AI models. TPUs are integrated into Google's cloud infrastructure, providing users with access to high-performance computing resources for AI tasks.
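To make the role of matrix multiplication concrete, the following is a minimal sketch in plain Python, not TPU code. It shows how a whole batch of inputs passes through one fully connected layer as a single matrix product, which is the kind of operation a TPU performs in hardware. The function names `matmul` and `dense_layer` and all numeric values are illustrative assumptions, not part of any Google API.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as lists of rows."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    n = len(b[0])
    return [
        [sum(row[p] * b[p][j] for p in range(k)) for j in range(n)]
        for row in a
    ]


def dense_layer(batch, weights, bias):
    """Apply y = x @ W + b to every row x of the batch with one matrix product."""
    product = matmul(batch, weights)
    return [[value + b_j for value, b_j in zip(row, bias)] for row in product]


# Hypothetical toy values: a batch of 3 inputs with 2 features each,
# passed through a layer that produces 2 outputs per input.
batch = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
weights = [[1.0, 2.0], [3.0, 4.0]]
bias = [0.5, -0.5]

outputs = dense_layer(batch, weights, bias)
print(outputs)  # [[7.5, 9.5], [15.5, 21.5], [23.5, 33.5]]
```

Every output element is an independent sum of products, so the work parallelizes well. Larger batches simply add rows to the same matrix product.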

Applications of TPUs:

TPUs find applications in various domains, including image recognition, natural language processing, and reinforcement learning. They power many Google services, such as Google Search, Google Photos, and Google Translate, enabling fast and accurate AI-driven experiences for users worldwide. Additionally, TPUs are used by researchers and developers to accelerate the training of deep learning models and explore new frontiers in AI research.

Neural Processing Units (NPUs):

Neural Processing Units, or NPUs, are another type of AI-specific processor designed to accelerate neural network computations. Unlike TPUs, which are developed by Google, NPUs are produced by many companies, including device manufacturers and semiconductor vendors.

Functionality of NPUs:

NPUs are optimized for executing neural network algorithms with high efficiency and performance. They often feature specialized hardware accelerators for tasks such as convolutional operations, which are prevalent in computer vision applications. NPUs are integrated into a wide range of devices, including smartphones, IoT (Internet of Things) devices, and edge computing systems, enabling on-device AI processing with minimal latency.
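As an illustration of the convolution operation mentioned above, here is a minimal plain-Python sketch of the sliding-window multiply-accumulate that NPU hardware is typically built to speed up. It assumes stride 1, no padding, and no kernel flip, which is the convention most neural network libraries call convolution. The function name `conv2d` and the toy image and kernel are hypothetical.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the elementwise products.

    Assumes stride 1, no padding, and no kernel flip.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    if out_h <= 0 or out_w <= 0:
        raise ValueError("kernel is larger than the image")
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            # Multiply-accumulate over the window anchored at (i, j).
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Hypothetical 3x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1]]  # responds where brightness increases from left to right

print(conv2d(image, kernel))  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The inner two loops repeat the same small multiply-accumulate pattern at every output position, which is why dedicated accelerator hardware suits it.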

Applications of NPUs:

NPUs are widely used in consumer electronics for tasks such as image recognition, speech recognition, and gesture recognition. They enable features such as facial recognition for unlocking smartphones, voice assistants for hands-free interaction, and object detection for augmented reality applications. NPUs also play a crucial role in edge computing scenarios, where AI processing is performed locally on devices rather than relying on cloud servers.

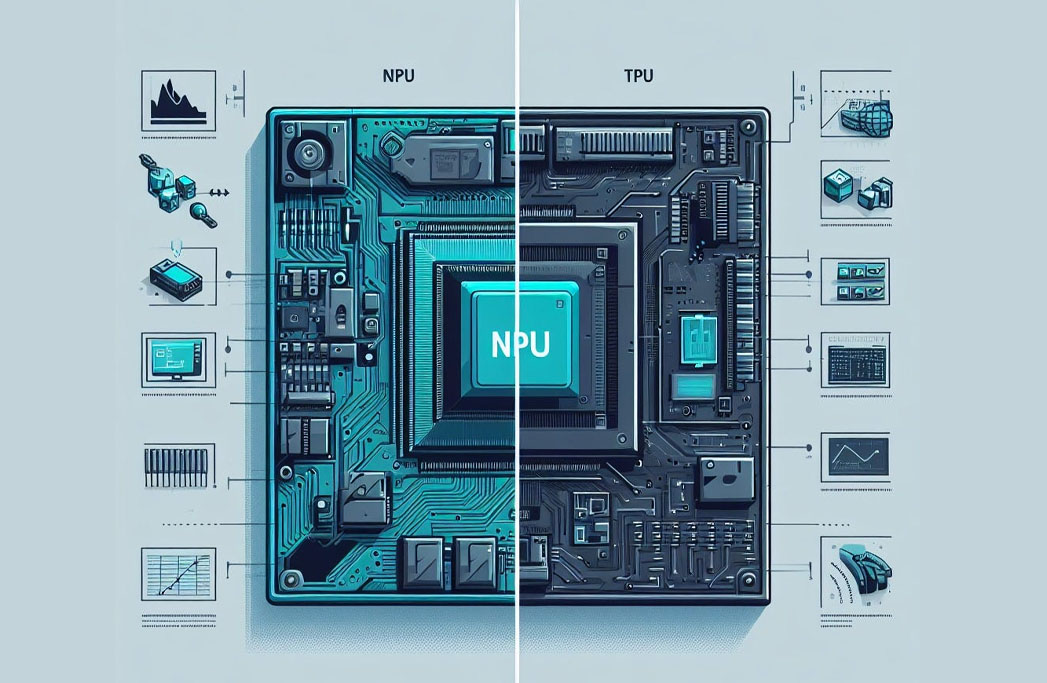

Comparison between TPUs and NPUs:

While both TPUs and NPUs are designed to accelerate AI computations, they exhibit differences in architecture, target applications, and deployment scenarios. TPUs are optimized for large-scale AI workloads in cloud environments, offering high performance and scalability for training and inference tasks. On the other hand, NPUs are tailored for on-device AI processing in edge and mobile devices, prioritizing energy efficiency and low latency.

Future Outlook:

The rapid advancement of AI-specific processors is expected to continue in the coming years, driven by increasing demand for AI capabilities across various industries. Research efforts are focused on developing even more efficient and specialized processors for specific AI tasks, such as natural language processing, reinforcement learning, and generative modeling. Additionally, advancements in chip design, manufacturing technologies, and software optimization techniques will further enhance the performance and capabilities of AI-specific processors.

Conclusion:

AI-specific processors, such as TPUs and NPUs, play a critical role in accelerating the adoption of artificial intelligence across diverse applications. These processors enable efficient execution of complex AI algorithms, leading to faster training times, lower inference latency, and enhanced user experiences. As the field of AI continues to evolve, the development of specialized processors will remain pivotal in pushing the boundaries of what is possible in artificial intelligence.

For more information, visit www.proxpc.com

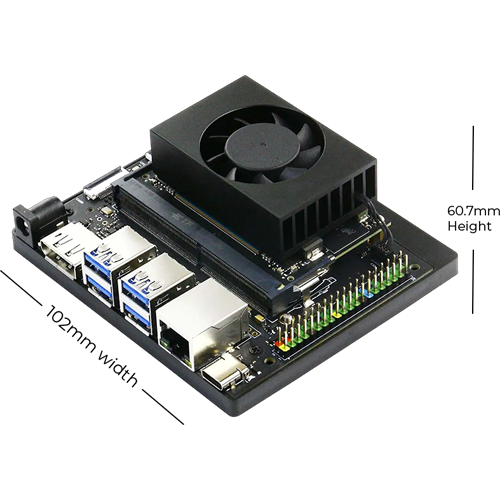
