Contents

- Applications of Computer AI Vision Projects
- Computer Vision Applications in Manufacturing
- Computer Vision in Healthcare
- Computer Vision in Agriculture
- Computer Vision in Transportation
- Computer Vision in Retail
- Computer Vision in Sports
- AI Vision Industry Guides and Applications of Computer Vision Projects
- Get Started With Enterprise Computer AI Vision Algorithms and Applications

This article covers an extensive list of novel, valuable computer vision applications across all industries. Find the best computer vision projects, computer vision ideas, and high-value use cases in the market right now.

In this article, we will cover the following:

- Manufacturing
- Healthcare
- Agriculture
- Transportation
- Sports

About us: ProX PC provides the world’s only end-to-end Computer Vision Platform. The technology enables global organizations to develop, deploy, and scale all computer vision applications in one place.

Applications of Computer AI Vision Projects

What is Computer Vision?

The field of computer vision is a sector of Artificial Intelligence (AI) that uses Machine Learning and Deep Learning to enable computers to see, perform AI pattern recognition, and analyze objects in photos and videos in the same way that people do. Computational vision is rapidly gaining popularity for automated AI vision inspection, remote monitoring, and automation.

Computer Vision work has a massive impact on companies across industries, from retail to security, healthcare, construction, automotive, manufacturing, logistics, and agriculture.

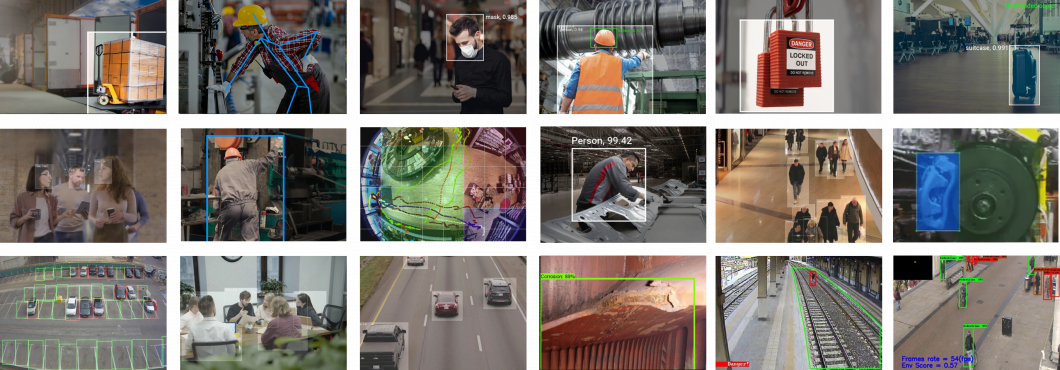

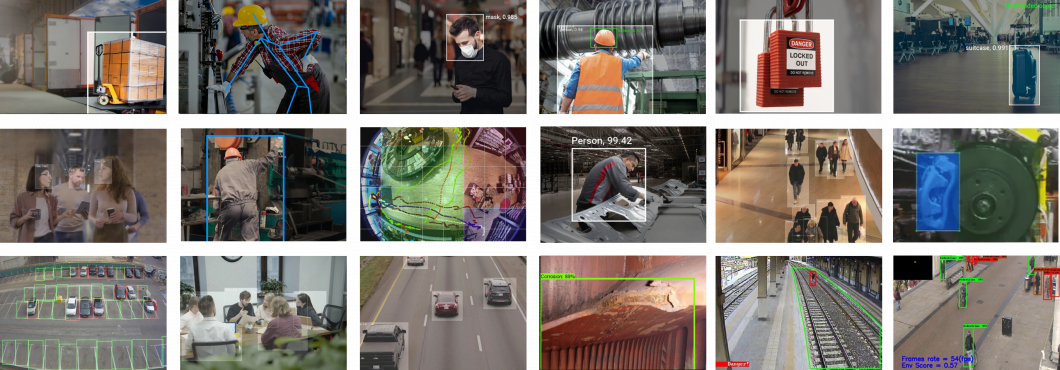

Custom Computer Vision applications built with ProX PC

Computer Vision Systems

Computer vision systems use (1) cameras to obtain visual data, (2) machine learning models for processing the images, and (3) conditional logic to automate application-specific use cases. The deployment of artificial intelligence to edge devices, so-called edge intelligence, makes computer vision implementations scalable, efficient, robust, secure, and private.
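The three parts described above (image source, model, conditional logic) can be sketched as a small loop. This is only an illustrative sketch: `Detection`, `run_pipeline`, `fake_model`, and `person_present` are hypothetical names, and the "model" is a stand-in that treats each frame as a list of labels rather than a real neural network.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float


def run_pipeline(frames, model, rule):
    """Run the model on every frame and return the indices where the rule fires."""
    alerts = []
    for index, frame in enumerate(frames):
        detections = model(frame)      # (2) model turns an image into detections
        if rule(detections):           # (3) conditional logic decides what to do
            alerts.append(index)
    return alerts


def fake_model(frame):
    """Stand-in for a real detector: a frame is just a list of labels here."""
    return [Detection(label, 0.9) for label in frame]


def person_present(detections, min_confidence=0.5):
    """Example rule (assumption): fire when a confident 'person' is detected."""
    return any(d.label == "person" and d.confidence >= min_confidence
               for d in detections)


# (1) frames would normally come from a camera; here they are hard-coded.
frames = [["car"], ["person", "car"], [], ["person"]]
print(run_pipeline(frames, fake_model, person_present))  # [1, 3]
```

In a real deployment the model call would wrap an actual detector, and the rule would trigger an application-specific action instead of collecting indices.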

At ProX PC, we provide the world’s only end-to-end computer vision platform. The solution helps leading organizations develop, deploy, scale, and secure their computer vision applications in one place.

Computer Vision Applications in Manufacturing

In manufacturing, image recognition is applied for AI vision inspection, quality control, remote monitoring, and system automation.

Productivity Analytics

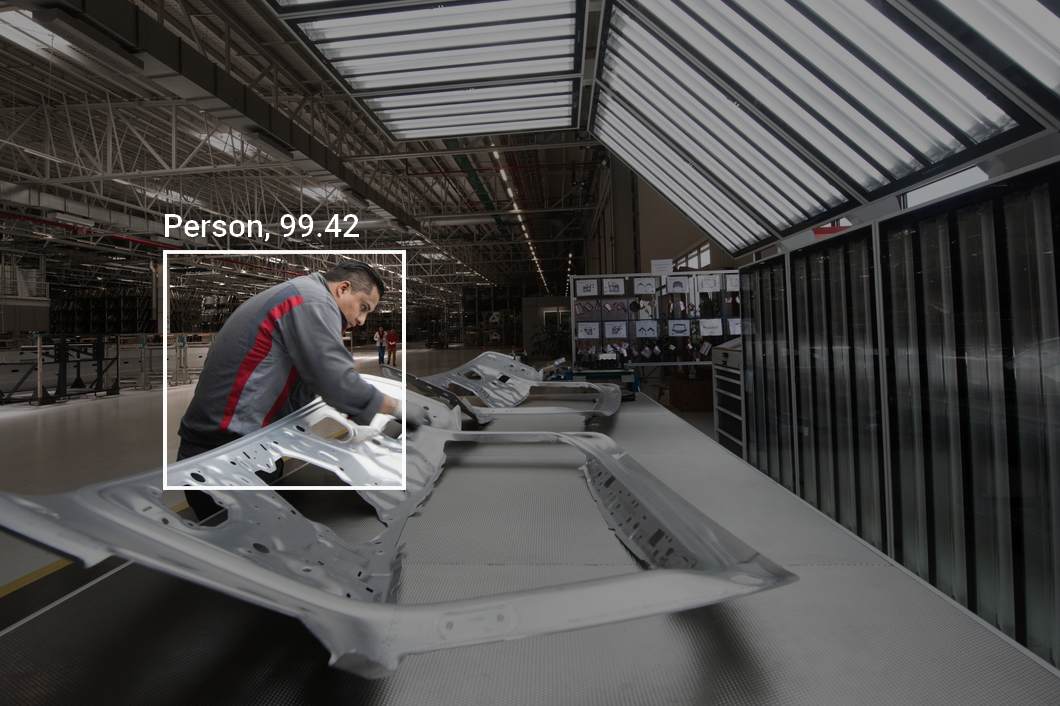

Productivity analytics track the impact of workplace change, how employees spend their time and resources, and the implementation of various tools. Such data can provide valuable insight into time management, workplace collaboration, and employee productivity. Computer vision lean management strategies aim to objectively quantify and assess processes with camera-based vision systems.

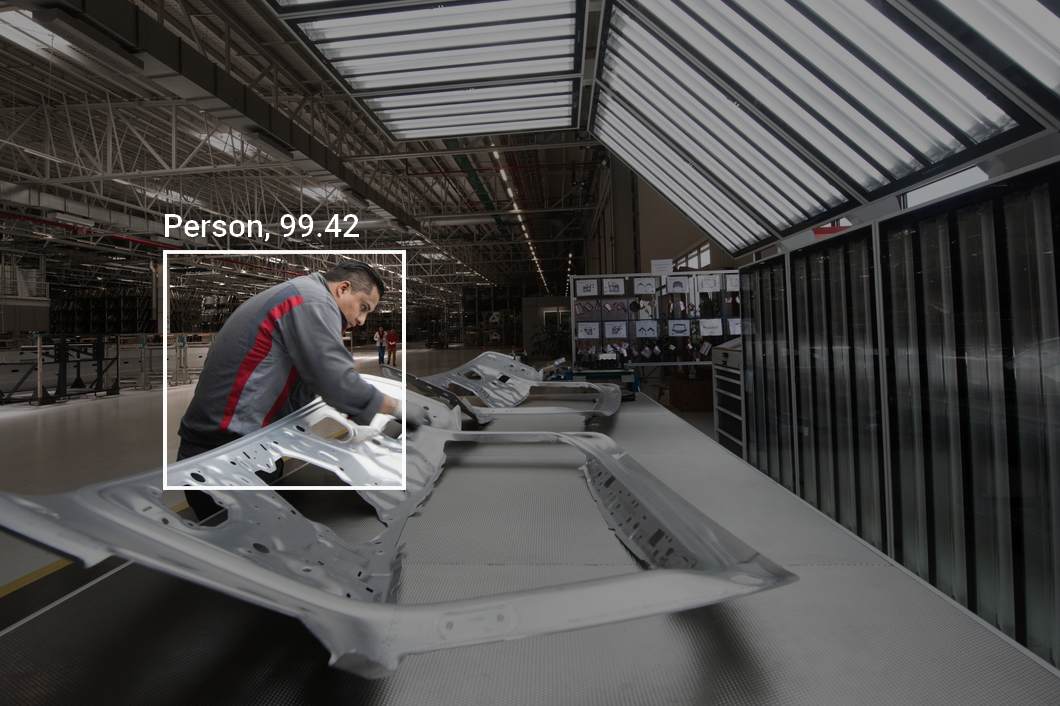

Privacy-preserving computer vision in industrial manufacturing.

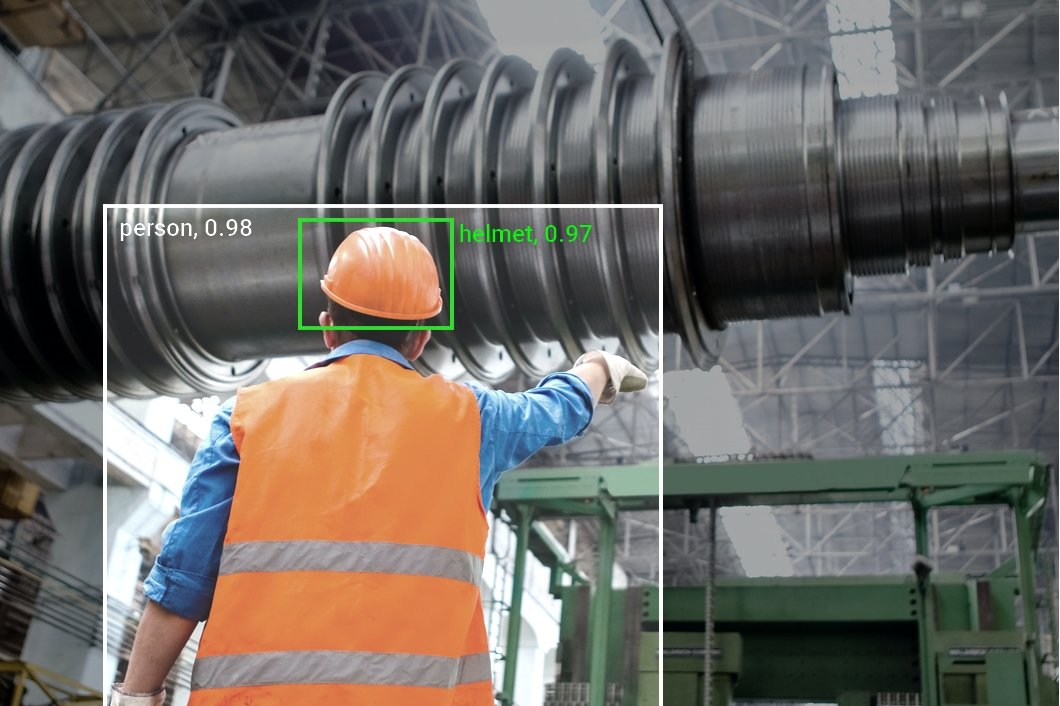

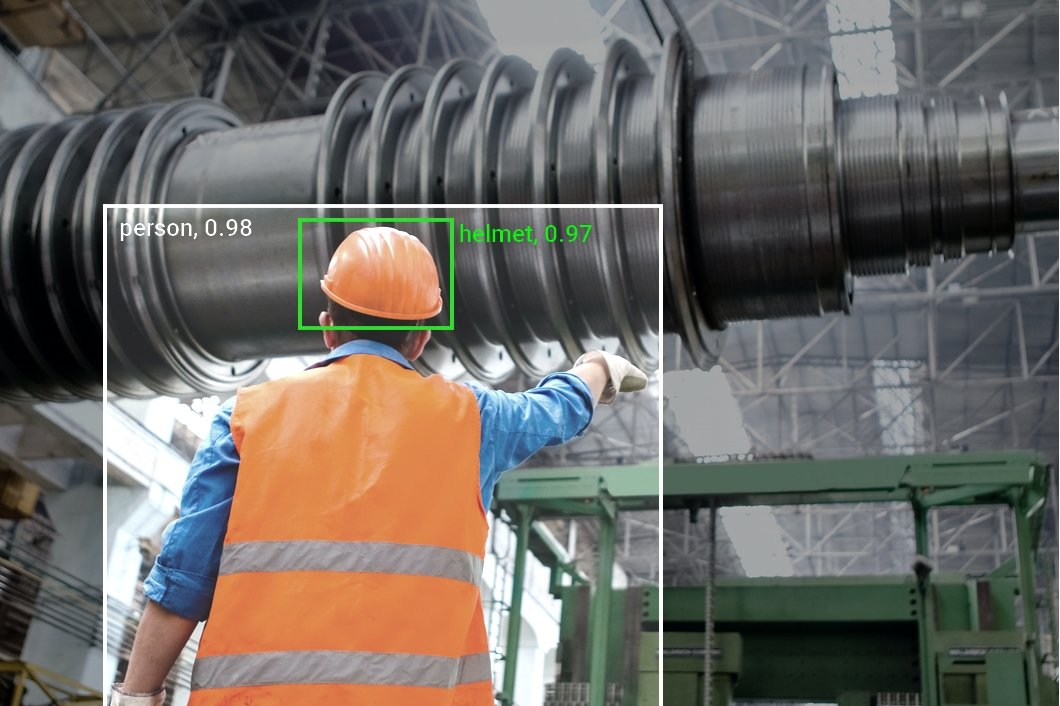

Visual Inspection of Equipment

Computer vision for visual inspection is a key strategy in smart manufacturing. Vision-based inspection systems are also gaining in popularity for automated inspection of Personal Protective Equipment (PPE), such as Mask Detection or Helmet Detection. Computational vision helps to monitor adherence to safety protocols on construction sites or in a smart factory.
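As a rough illustration of how helmet compliance could be checked once a detector has produced bounding boxes, the sketch below pairs person boxes with helmet boxes. The pairing heuristic (helmet center inside the top third of the person box), the function names, and the `(x1, y1, x2, y2)` box format with the origin at the top-left are all assumptions for this example, not a method described in this article.

```python
def center(box):
    """Center point of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def has_helmet(person, helmets, head_fraction=0.33):
    """True if some helmet center falls inside the top part of the person box."""
    px1, py1, px2, py2 = person
    head_bottom = py1 + head_fraction * (py2 - py1)
    for helmet in helmets:
        cx, cy = center(helmet)
        if px1 <= cx <= px2 and py1 <= cy <= head_bottom:
            return True
    return False


def find_violations(persons, helmets):
    """Indices of persons for whom no helmet was matched."""
    return [i for i, person in enumerate(persons)
            if not has_helmet(person, helmets)]


persons = [(0, 0, 100, 300), (200, 0, 300, 300)]
helmets = [(30, 5, 70, 45)]
print(find_violations(persons, helmets))  # [1]
```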

Computer Vision for personal protective equipment detection

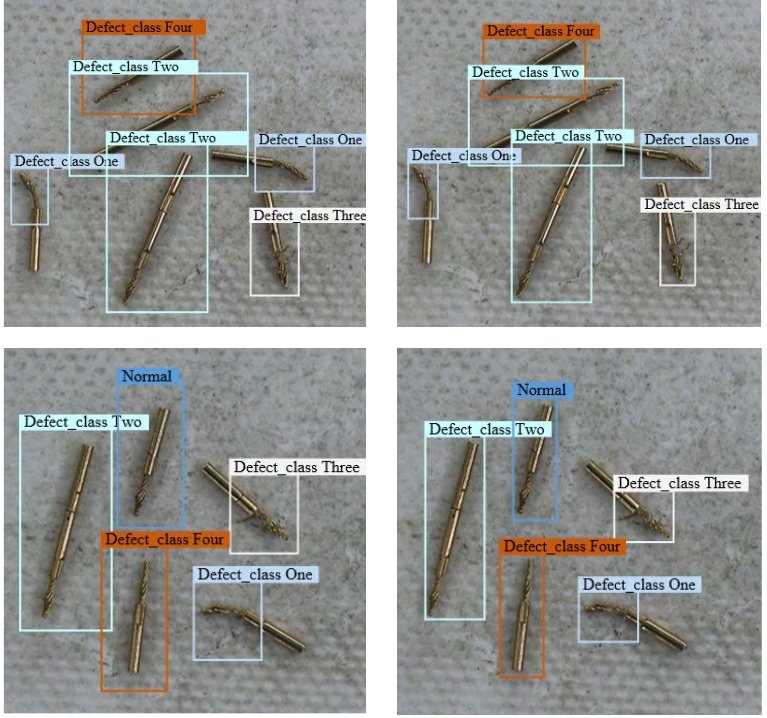

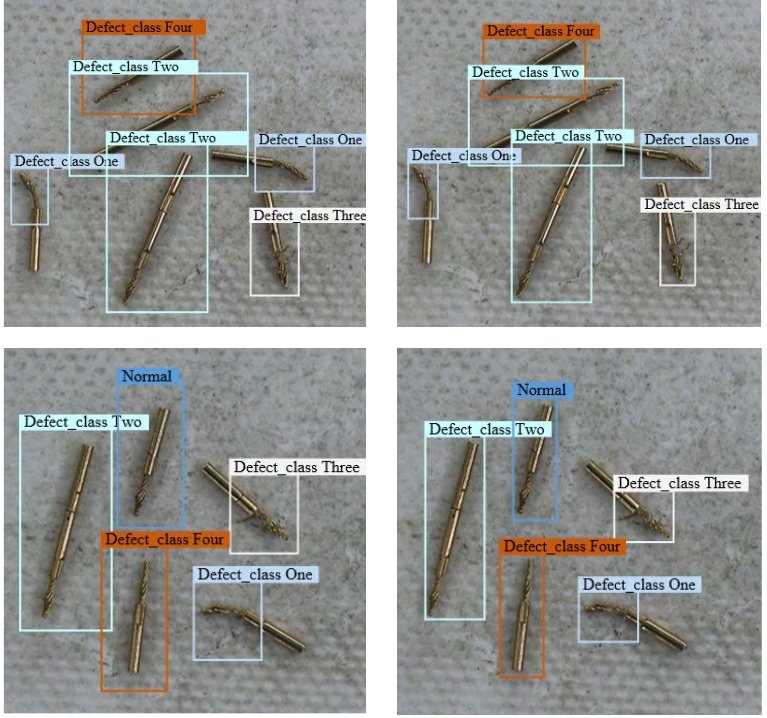

Quality Management

Smart camera applications provide a scalable method to implement automated visual inspection and quality control of production processes and assembly lines in smart factories. Deep learning-based real-time object detection provides better results (detection accuracy, speed, objectivity, reliability) than laborious manual inspection.

Compared to traditional machine vision systems, AI vision inspection uses machine learning methods that are highly robust and don’t require expensive special cameras and inflexible settings. Therefore, AI vision methods are very scalable across multiple locations and factories.

Visual inspection for defect part detection in manufacturing

Skill Training

Another application field of vision systems is optimizing assembly line operations in industrial production and human-robot interaction. The evaluation of human action can help construct standardized action models related to different operation steps and evaluate the performance of trained workers.

Automatically assessing the action quality of workers can be beneficial by improving working performance, promoting productive efficiency (LEAN optimization), and, more importantly, discovering dangerous actions to lower accident rates.

Computer Vision in Healthcare

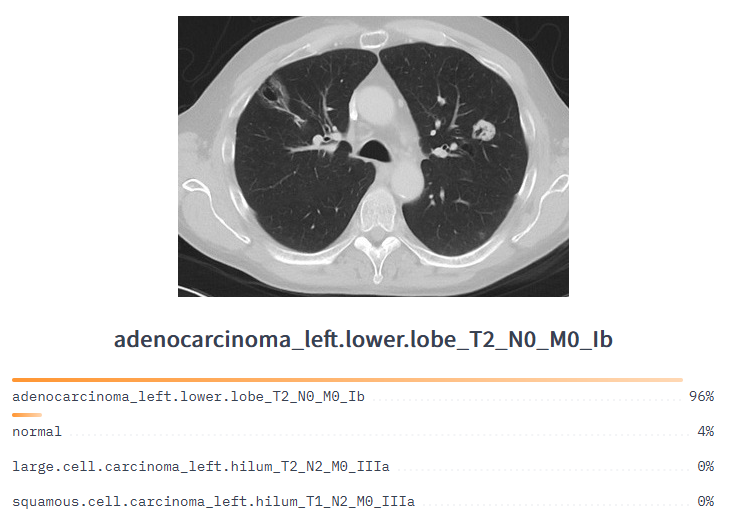

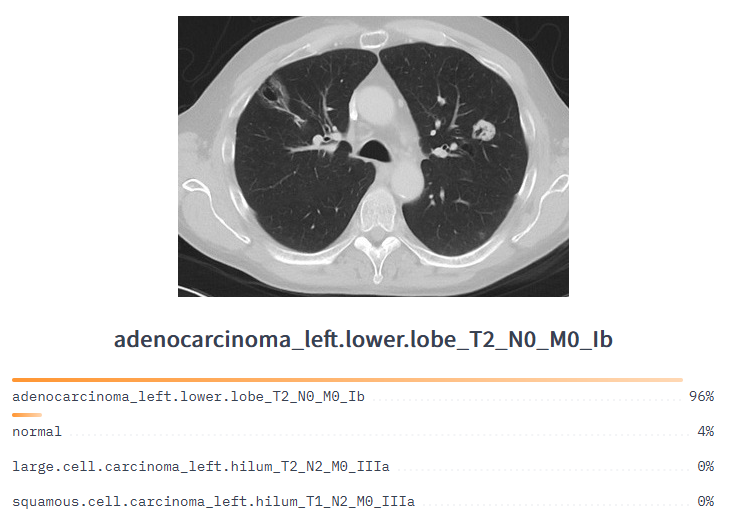

Cancer Detection

Machine learning is used in medicine for purposes such as breast and skin cancer detection. For instance, image recognition allows scientists to detect slight differences between cancerous and non-cancerous images and to classify magnetic resonance imaging (MRI) scans and submitted photos as malignant or benign.

COVID-19 diagnosis

Computer Vision can be used for coronavirus control. Multiple deep-learning computer vision models exist for x-ray-based COVID-19 diagnosis. The most popular one for detecting COVID-19 cases with digital chest x-ray radiography (CXR) images is named COVID-Net and was developed by Darwin AI, Canada.

Cell Classification

Machine learning has been used in medical applications to classify T-lymphocytes against colon cancer epithelial cells with high accuracy. ML is therefore expected to significantly speed up the identification of colon cancer, at little to no cost once the model has been built.

Movement Analysis

Neurological and musculoskeletal diseases such as oncoming strokes, balance, and gait problems can be detected using deep learning models and computer vision even without doctor analysis. Pose Estimation computer vision applications that analyze patient movement assist doctors in diagnosing a patient with ease and increased accuracy.
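One quantity a movement analysis application can derive from pose estimation output is the angle at a joint. The sketch below computes it from three 2D keypoints; the function name and the example coordinates are illustrative assumptions, and real keypoints would come from a pose estimation model.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# Hypothetical hip, knee, and ankle keypoints (image coordinates).
hip, knee, ankle = (0.0, 0.0), (0.0, 1.0), (1.0, 1.0)
print(round(joint_angle(hip, knee, ankle)))  # 90
```

Tracking such an angle over consecutive frames gives a simple gait or range-of-motion signal that a clinician could review.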

Mask Detection

Masked Face Recognition is used to detect the use of masks and protective equipment to limit the spread of coronavirus. Likewise, computer vision systems help countries implement mask-wearing as a control strategy to contain the spread of coronavirus disease.

For this reason, private companies such as Uber have created computer vision features such as face detection to be implemented in their mobile apps to detect whether passengers are wearing masks or not. Programs like this make public transportation safer during the coronavirus pandemic.

Camera-based mask detection

Tumor Detection

Brain tumors can be seen in MRI scans and are often detected using deep neural networks. Tumor detection software utilizing deep learning is crucial to the medical industry because it can detect tumors at high accuracy to help doctors make their diagnoses.

New methods are constantly being developed to heighten the accuracy of these diagnoses.

Lung cancer classification model to analyze CT medical imaging

Disease Progression Score

Computer vision can be used to identify critically ill patients to direct medical attention (critical patient screening). People infected with COVID-19 are found to have more rapid respiration.

Deep Learning with depth cameras can be used to identify abnormal respiratory patterns to perform an accurate and unobtrusive yet large-scale screening of people infected with the COVID-19 virus.

Healthcare and rehabilitation

Physical therapy is important for the recovery training of stroke survivors and sports injury patients. The main challenges are related to the costs of supervision by a medical professional, hospital, or agency.

Home training with a vision-based rehabilitation application is preferred because it allows people to practice movement training privately and economically. In computer-aided therapy or rehabilitation, human action evaluation can be applied to assist patients in training at home, guide them to perform actions properly, and prevent further injuries.

Medical Skill Training

Computer Vision applications are used for assessing the skill level of expert learners on self-learning platforms. For example, augmented reality simulation-based surgical training platforms have been developed for surgical education.

In addition, the technique of action quality assessment makes it possible to develop computational approaches that automatically evaluate surgical students’ performance. Accordingly, meaningful feedback can be provided to individuals to guide them in improving their skill levels.

Computer Vision in Agriculture

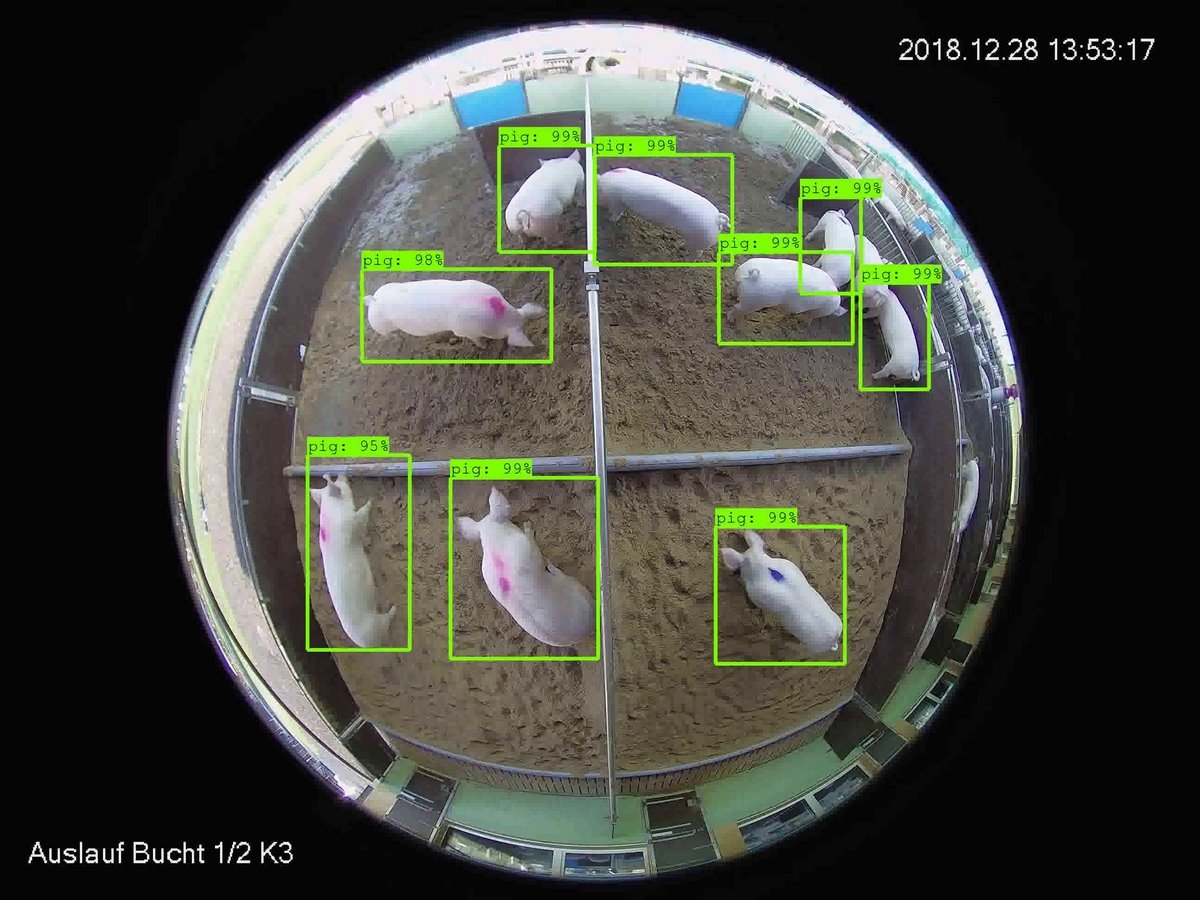

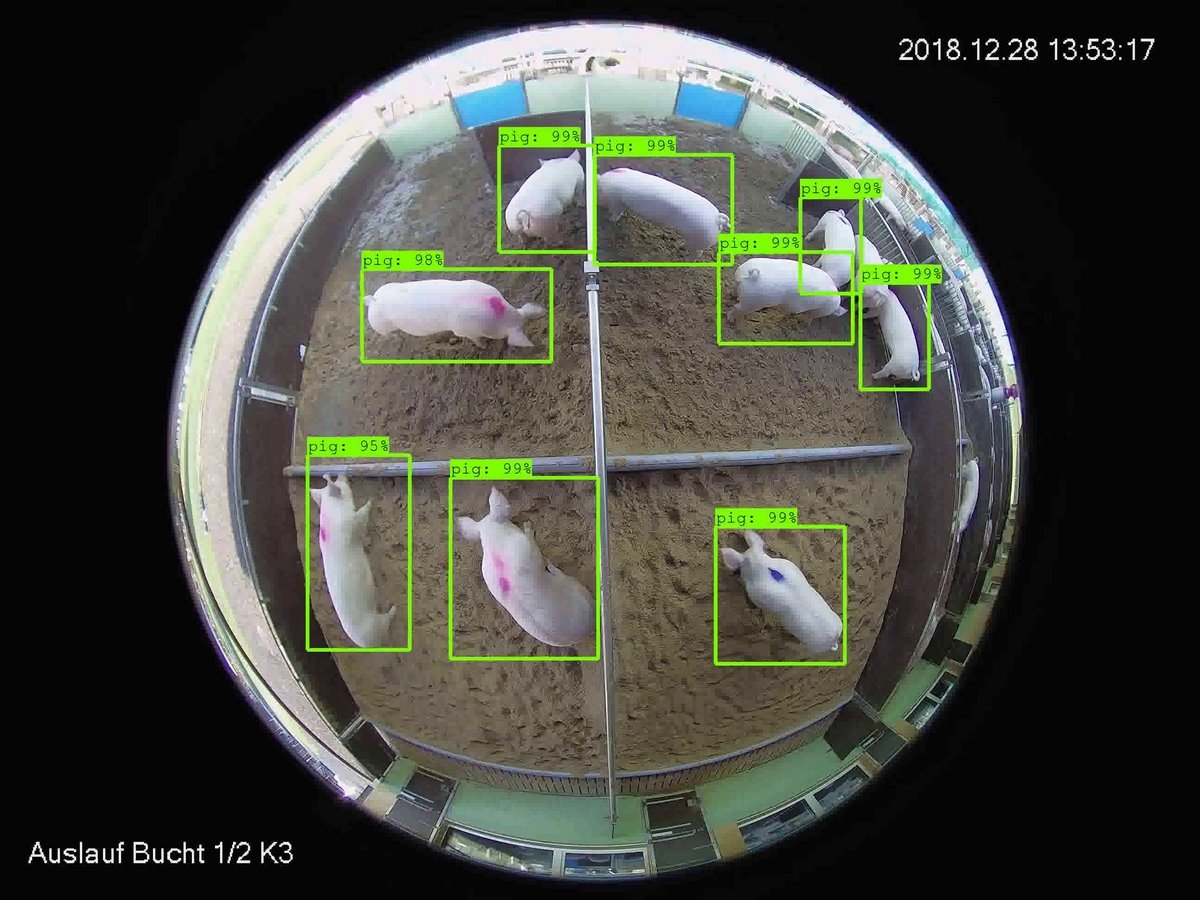

Animal Monitoring

Animal monitoring with computer vision is a key strategy of smart farming. Machine learning uses camera streams to monitor the health of specific livestock such as pigs, cattle, or poultry. Smart vision systems aim to analyze animal behavior to increase productivity, health, and welfare of the animals and thereby influence yields and economic benefits in the industry.

Animal monitoring system for smart farming

Farm Automation

Technologies such as harvesting, seeding, and weeding robots, autonomous tractors, vision systems to monitor remote farms, and drones for visual inspection can maximize productivity despite labor shortages. Automating manual inspection with AI vision can significantly increase profitability, reduce the ecological footprint, and improve decision-making processes.

Agriculture Computer Vision Application for Animal Monitoring

Crop Monitoring

The yield and quality of important crops such as rice and wheat determine the stability of food security. Traditionally, crop growth monitoring relies mainly on subjective human judgment and is neither timely nor accurate.

Computer Vision applications allow us to continuously and non-destructively monitor plant growth and the response to nutrient requirements.

Compared with manual operations, the real-time monitoring of crop growth by applying computer vision technology can detect the subtle changes in crops due to malnutrition much earlier and can provide a reliable and accurate basis for timely regulation.

In addition, computer vision applications can be used to measure plant growth indicators or determine the growth stage.

Flowering Detection

The heading date of wheat is one of the most important parameters for wheat crops. An automatic computer vision observation system can be used to determine the wheat heading period.

Computer vision technology has the advantages of low cost, small error, high efficiency, and good robustness and can be dynamically and continuously analyzed.

Plantation Monitoring

In intelligent agriculture, image processing with drone images can be used to monitor palm oil plantations remotely. With geospatial orthophotos, it is possible to identify which part of the plantation land is fertile for planted crops.

It is also possible to identify areas that are less fertile in terms of growth and parts of plantation fields that are not growing at all. OpenCV is a popular tool for such image-processing tasks.

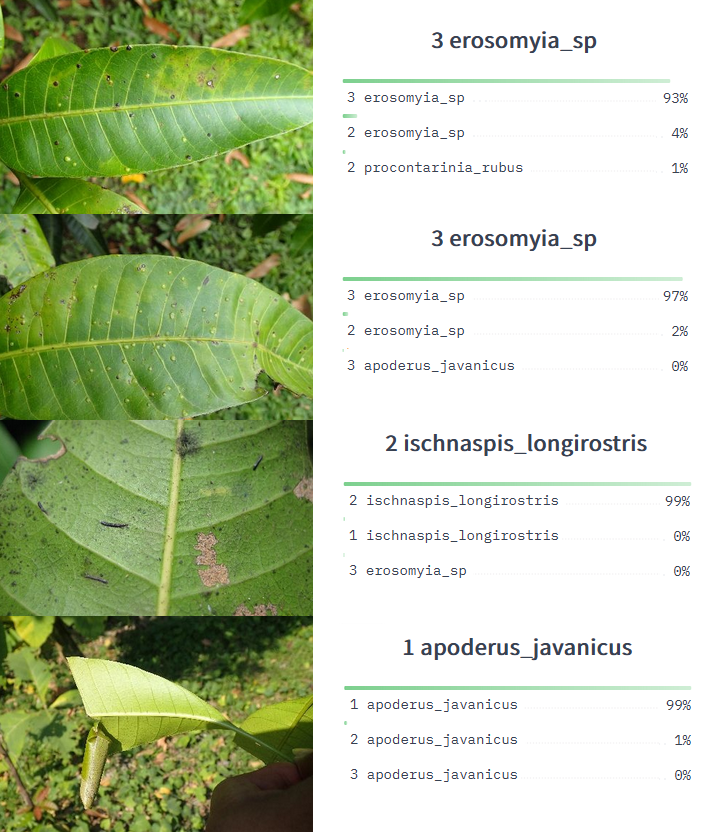

Insect Detection

Rapid and accurate recognition and counting of flying insects are of great importance, especially for pest control. However, traditional manual identification and counting of flying insects are inefficient and labor-intensive. Vision-based systems allow the counting and recognizing of flying insects (based on You Only Look Once (YOLO) object detection and classification).

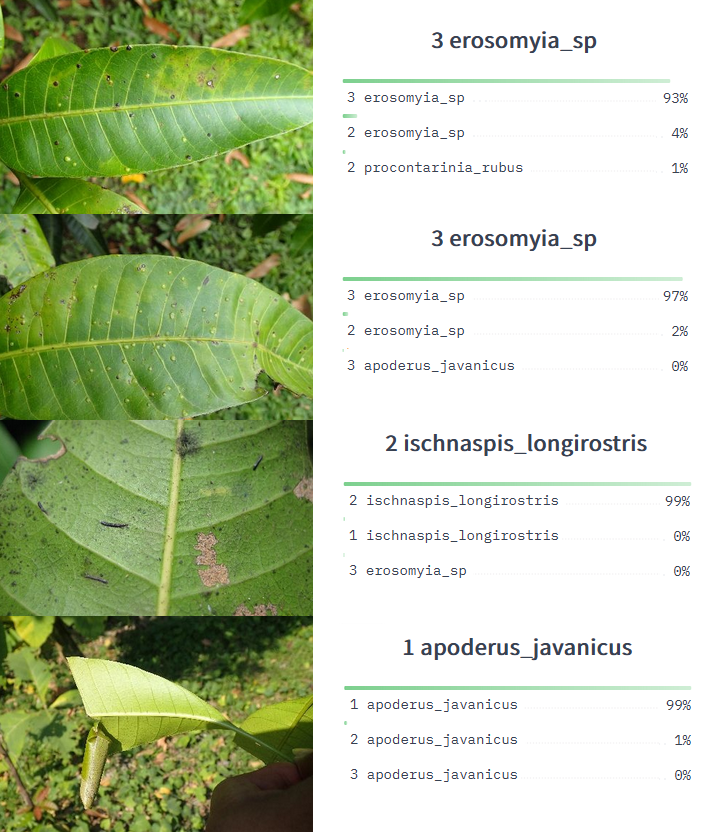

Plant Disease Detection

Automatic and accurate estimation of disease severity is essential for food security, disease management, and yield loss prediction. The deep learning method avoids labor-intensive feature engineering and threshold-based image segmentation.

Automatic image-based estimation of plant disease severity using deep convolutional neural networks (CNNs) has been developed, for example, to identify apple black rot.

Computer Vision Application for mango plant disease classification in agriculture

Automatic weeding

Weeds are considered to be harmful plants in agronomy because they compete with crops to obtain water, minerals, and other nutrients in the soil. Spraying pesticides only in the exact locations of weeds greatly reduces the risk of contaminating crops, humans, animals, and water resources.

The intelligent detection and removal of weeds are critical to the development of agriculture. A neural network-based computer vision system can be used to identify potato plants and three different weeds for on-site specific spraying.

Automatic Harvesting

In traditional agriculture, mechanical operations still rely on manual harvesting as the mainstay, which results in high costs and low efficiency. In recent years, however, intelligent harvesting machines and picking robots based on computer vision technology have emerged in agricultural production, marking a new step in the automatic harvesting of crops.

The main focus of harvesting operations is to ensure product quality during harvesting to maximize the market value. Computer Vision-powered applications include picking cucumbers automatically in a greenhouse environment or the automatic identification of cherries in a natural environment.

Agricultural Product Quality Testing

The quality of agricultural products is one of the important factors affecting market prices and customer satisfaction. Compared to manual inspections, Computer Vision provides a way to perform external quality checks.

AI vision systems can achieve high degrees of flexibility and repeatability at a relatively low cost and with high precision. For example, systems based on machine vision and computer vision are used for rapid testing of sweet lemon damage or non-destructive quality evaluation of potatoes.

Irrigation Management

Soil management based on using technology to enhance soil productivity through cultivation, fertilization, or irrigation has a notable impact on modern agricultural production. By obtaining useful information about the growth of horticultural crops through images, the soil water balance can be accurately estimated to achieve accurate irrigation planning.

Computer vision applications provide valuable information about the water balance for irrigation management. A vision-based system can process multi-spectral images taken by unmanned aerial vehicles (UAVs) and obtain the vegetation index (VI) to provide decision support for irrigation management.
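The article does not say which vegetation index is meant. One widely used choice is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), computed per pixel from the near-infrared and red bands. The sketch below assumes that index; the band values, the 0.3 threshold, and the function names are illustrative assumptions, not agronomic guidance.

```python
def ndvi(nir, red):
    """NDVI for one pixel; returns 0.0 when both bands are zero (a convention)."""
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


def ndvi_map(nir_band, red_band):
    """Per-pixel NDVI for two equally sized 2D bands given as nested lists."""
    return [[ndvi(n, r) for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir_band, red_band)]


def low_vigor_fraction(ndvi_values, threshold=0.3):
    """Fraction of pixels whose NDVI is below a (hypothetical) threshold."""
    flat = [value for row in ndvi_values for value in row]
    return sum(value < threshold for value in flat) / len(flat)


# Tiny made-up 2x2 reflectance bands (arbitrary units).
nir_band = [[80, 50], [40, 20]]
red_band = [[20, 50], [10, 20]]
values = ndvi_map(nir_band, red_band)
print(low_vigor_fraction(values))  # 0.5
```

A decision-support tool could flag field zones where the low-vigor fraction stays high over several flights as candidates for closer inspection.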

UAV Farmland Monitoring

Real-time farmland information and an accurate understanding of that information play a basic role in precision agriculture. Over recent years, drones (UAVs), as a rapidly advancing technology, have allowed high-resolution agricultural information to be acquired quickly and at low cost.

In addition, UAV platforms equipped with image sensors provide detailed information on agricultural economics and crop conditions (for example, continuous crop monitoring). As a result, UAV remote sensing has contributed to an increase in agricultural production with a decrease in agricultural costs.

Yield Assessment

Through the application of computer vision technology, the functions of soil management, maturity detection, and yield estimation for farms have been realized. Moreover, the existing technology can be well applied to methods such as spectral analysis and deep learning.

Most of these methods have the advantages of high precision, low cost, good portability, good integration, and scalability and can provide reliable support for management decision-making. An example is the estimation of citrus crop yield via fruit detection and counting using computer vision.

Also, the yield from sugarcane fields can be predicted by processing images obtained using UAVs.

Computer Vision in Transportation

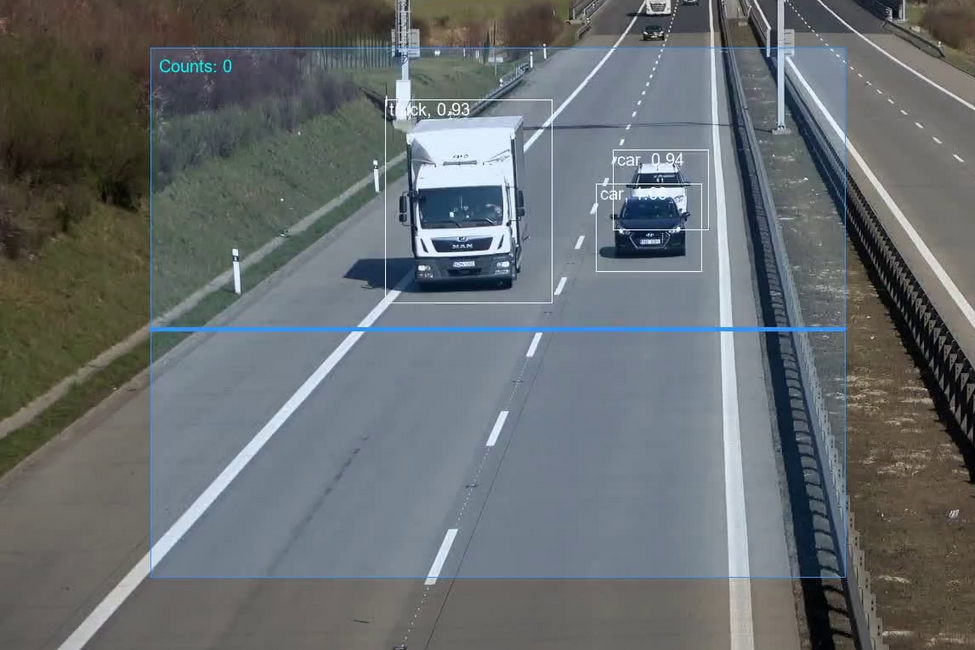

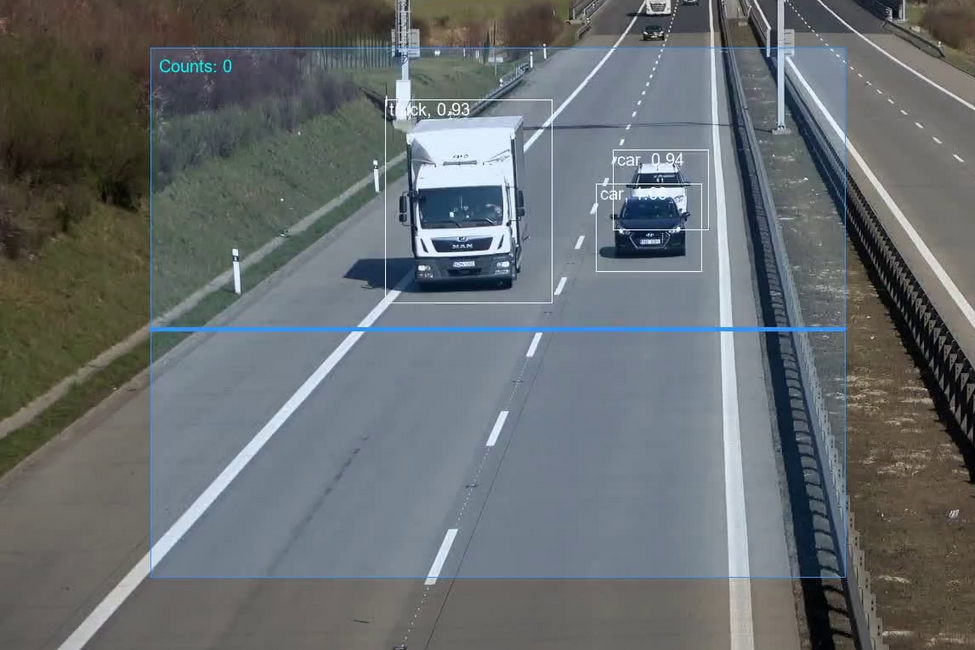

Vehicle Classification

Computer Vision applications for automated vehicle classification have a long history. The technologies for automated vehicle classification for vehicle counting have been evolving over the decades. Deep learning methods make it possible to implement large-scale traffic analysis systems using common, inexpensive security cameras.

With rapidly growing affordable sensors such as closed‐circuit television (CCTV) cameras, light detection and ranging (LiDAR), and even thermal imaging devices, vehicles can be detected, tracked, and categorized in multiple lanes simultaneously. The accuracy of vehicle classification can be improved by combining multiple sensors, such as thermal imaging and LiDAR, with RGB cameras (common surveillance and IP cameras).

In addition, there are multiple specializations; for example, a deep-learning-based computer vision solution for construction vehicle detection has been employed for purposes such as safety monitoring, productivity assessment, and managerial decision-making.

Vehicle detection and counting using object detection and classification

Moving Violations Detection

Law enforcement agencies and municipalities are increasing the deployment of camera‐based roadway monitoring systems to reduce unsafe driving behavior. Probably the most critical application is the detection of stopped vehicles in dangerous areas.

Also, there is increasing use of computer vision techniques in smart cities that involve automating the detection of violations such as speeding, running red lights or stop signs, wrong‐way driving, and making illegal turns.

Traffic Flow Analysis

Traffic flow analysis has been studied extensively for intelligent transportation systems (ITS) using invasive methods (tags, under-pavement coils, etc.) and non-invasive methods such as cameras.

With the rise of computer vision and AI, video analytics can now be applied to ubiquitous traffic cameras, which can have a vast impact on ITS and smart cities. Traffic flow can be observed with computer vision to measure some of the variables required by traffic engineers.
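One such variable is the flow rate (vehicles per hour). A minimal sketch, assuming an upstream tracker already provides per-vehicle centroid positions over time, counts tracks that cross a virtual line and scales the count to an hourly rate. The track format, the line position, and the function names are assumptions made for this example.

```python
def count_crossings(tracks, line_y):
    """Count tracks whose centroid moves from above line_y to on or below it.

    tracks maps a track id to a time-ordered list of (timestamp, y) samples.
    Each track is counted at most once.
    """
    count = 0
    for positions in tracks.values():
        for (_, y_prev), (_, y_next) in zip(positions, positions[1:]):
            if y_prev < line_y <= y_next:
                count += 1
                break
    return count


def flow_rate_per_hour(vehicle_count, interval_seconds):
    """Scale a count observed over interval_seconds to vehicles per hour."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return vehicle_count * 3600 / interval_seconds


# Made-up tracks observed during a 60-second window.
tracks = {
    1: [(0, 10), (1, 40), (2, 70)],   # crosses y = 50
    2: [(0, 60), (1, 80)],            # starts below the line, never crosses
    3: [(0, 20), (1, 45), (2, 55)],   # crosses y = 50
}
crossed = count_crossings(tracks, line_y=50)
print(crossed, flow_rate_per_hour(crossed, 60))  # 2 120.0
```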

Parking Occupancy Detection

Visual parking space monitoring is used with the goal of parking lot occupancy detection. Especially in smart cities, computer vision applications power decentralized and efficient solutions for visual parking lot occupancy detection based on a deep Convolutional Neural Network (CNN).

There exist multiple datasets for parking lot detection, such as PKLot and CNRPark-EXT. Furthermore, video-based parking management systems have been implemented using stereoscopic imaging (3D) or thermal cameras. The advantage of camera-based parking lot detection is the scalability for large-scale use, inexpensive maintenance, and installation, especially since it is possible to reuse security cameras.
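The approaches mentioned above classify each parking space with a CNN. As a simpler stand-in that shows the occupancy logic only, the sketch below marks a slot as occupied when a detected vehicle box covers enough of the slot rectangle. The slot layout, the 0.5 coverage threshold, and the function names are assumptions for illustration.

```python
def intersection_area(a, b):
    """Overlap area of two (x1, y1, x2, y2) boxes; 0 if they do not overlap."""
    width = min(a[2], b[2]) - max(a[0], b[0])
    height = min(a[3], b[3]) - max(a[1], b[1])
    return max(0, width) * max(0, height)


def area(box):
    return (box[2] - box[0]) * (box[3] - box[1])


def occupancy(slots, vehicles, min_cover=0.5):
    """Map each slot name to True if some vehicle covers >= min_cover of it."""
    status = {}
    for name, slot in slots.items():
        covered = max((intersection_area(slot, v) for v in vehicles), default=0)
        status[name] = covered / area(slot) >= min_cover
    return status


# Three hypothetical slots and two detected vehicle boxes.
slots = {"A1": (0, 0, 10, 20), "A2": (10, 0, 20, 20), "A3": (20, 0, 30, 20)}
vehicles = [(1, 2, 9, 18), (18, 0, 26, 20)]
print(occupancy(slots, vehicles))  # {'A1': True, 'A2': False, 'A3': True}
```

Measuring coverage relative to the slot area, rather than a symmetric IoU, keeps a large vehicle box from being penalized for extending beyond a small slot.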

Vision-based parking lot occupancy detection

Automated License Plate Recognition (ALPR)

Many modern transportation and public safety systems rely on recognizing and extracting license plate information from still images or videos. Automated license plate recognition (ALPR) has in many ways transformed the public safety and transportation industries.

Such number plate recognition systems enable modern tolled roadway solutions, providing tremendous operational cost savings via automation and even enabling completely new capabilities in the marketplace (such as police cruiser‐mounted license plate reading units).

OpenALPR is a popular automatic number-plate recognition library based on optical character recognition (OCR) on images or video feeds of vehicle registration plates.

Vehicle re-identification

With improvements in person re-identification, smart transportation, and surveillance systems aim to replicate this approach for vehicles using vision-based vehicle re-identification. Conventional methods to provide a unique vehicle ID are usually intrusive (in-vehicle tag, cellular phone, or GPS).

For controlled settings such as at a toll booth, automatic number-plate recognition (ANPR) is probably the most suitable technology for the accurate identification of individual vehicles. However, license plates are subject to change and forgery, and ALPR cannot capture salient characteristics of the vehicles, such as marks or dents.

Non-intrusive methods such as image-based recognition have high potential and demand but are still far from mature for practical usage. Most existing vision-based vehicle re-identification techniques are based on vehicle appearances such as color, texture, and shape.

Today, the recognition of subtle, distinctive features such as vehicle make or year model is still an unresolved challenge.

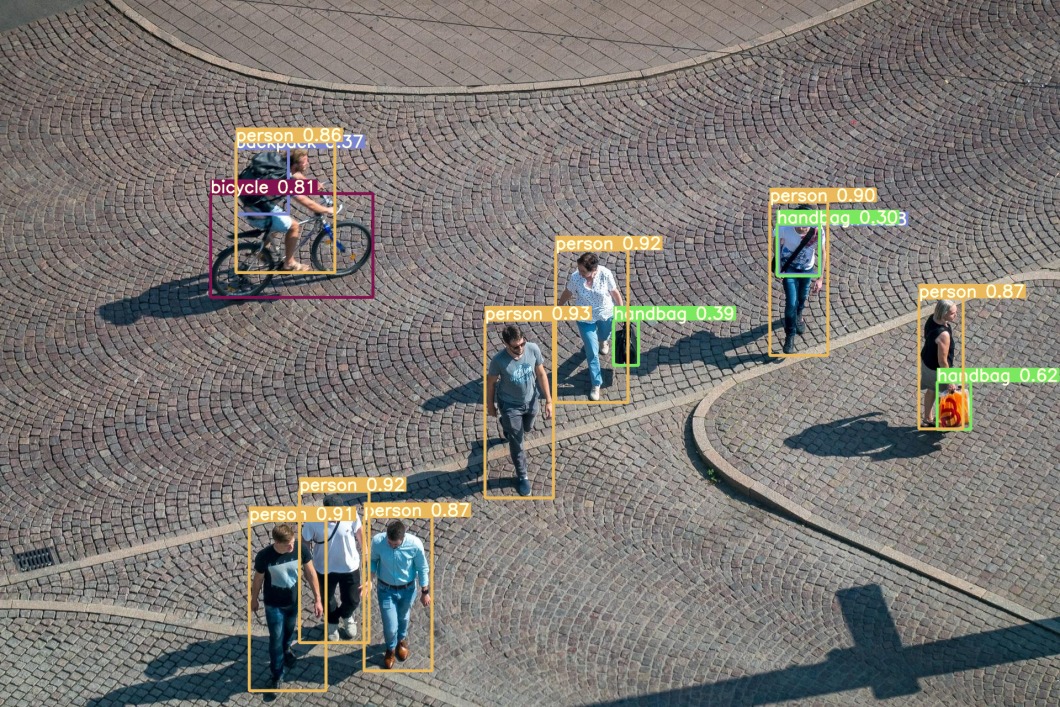

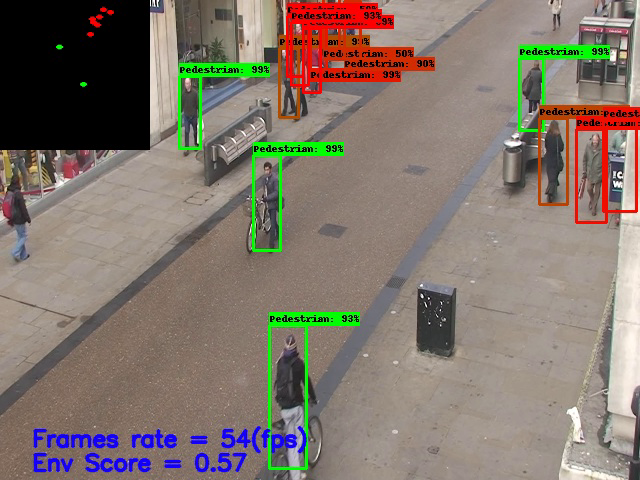

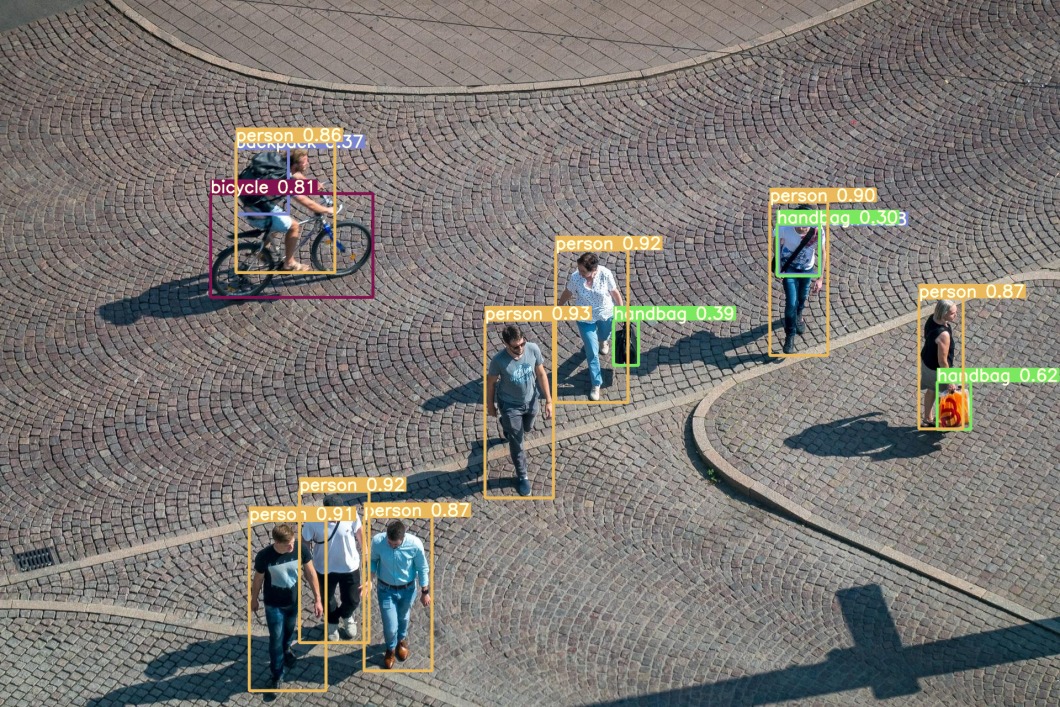

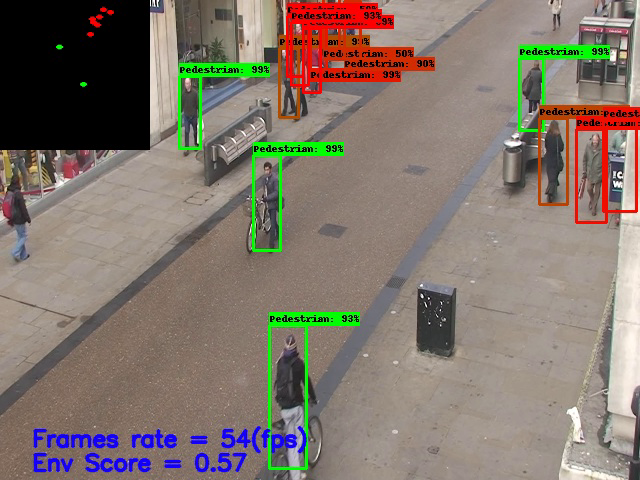

Pedestrian Detection

The detection of pedestrians is crucial to intelligent transportation systems (ITS). Use cases range from self-driving cars to infrastructure surveillance, traffic management, transit safety and efficiency, and law enforcement.

Pedestrian detection involves many types of sensors, such as traditional CCTV or IP cameras, thermal imaging devices, near‐infrared imaging devices, and onboard RGB cameras. A person detection algorithm, or people detector, can be based on infrared signatures, shape features, gradient features, machine learning, or motion features.

Pedestrian detection relying on deep convolutional neural networks (CNNs) has made significant progress, even with the detection of heavily occluded pedestrians.

Pedestrian detection with YOLOv7

Traffic Sign Detection

Computer Vision applications are used for traffic sign detection and recognition. Vision techniques are applied to segment traffic signs from different traffic scenes (using image segmentation) and employ deep learning algorithms to recognize and classify traffic signs.

Collision Avoidance Systems

Vehicle detection and lane detection form an integral part of most advanced driver assistance systems (ADAS) for autonomous vehicles. More recently, deep neural networks have been investigated for use in autonomous collision avoidance systems.

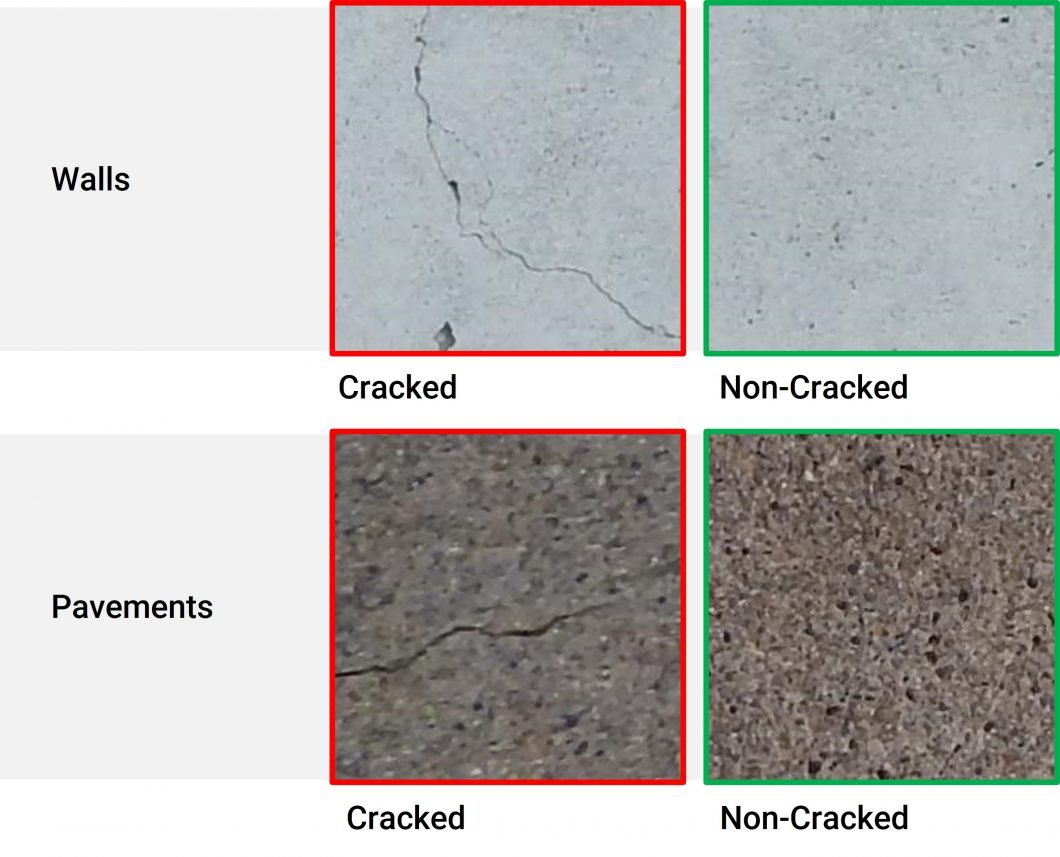

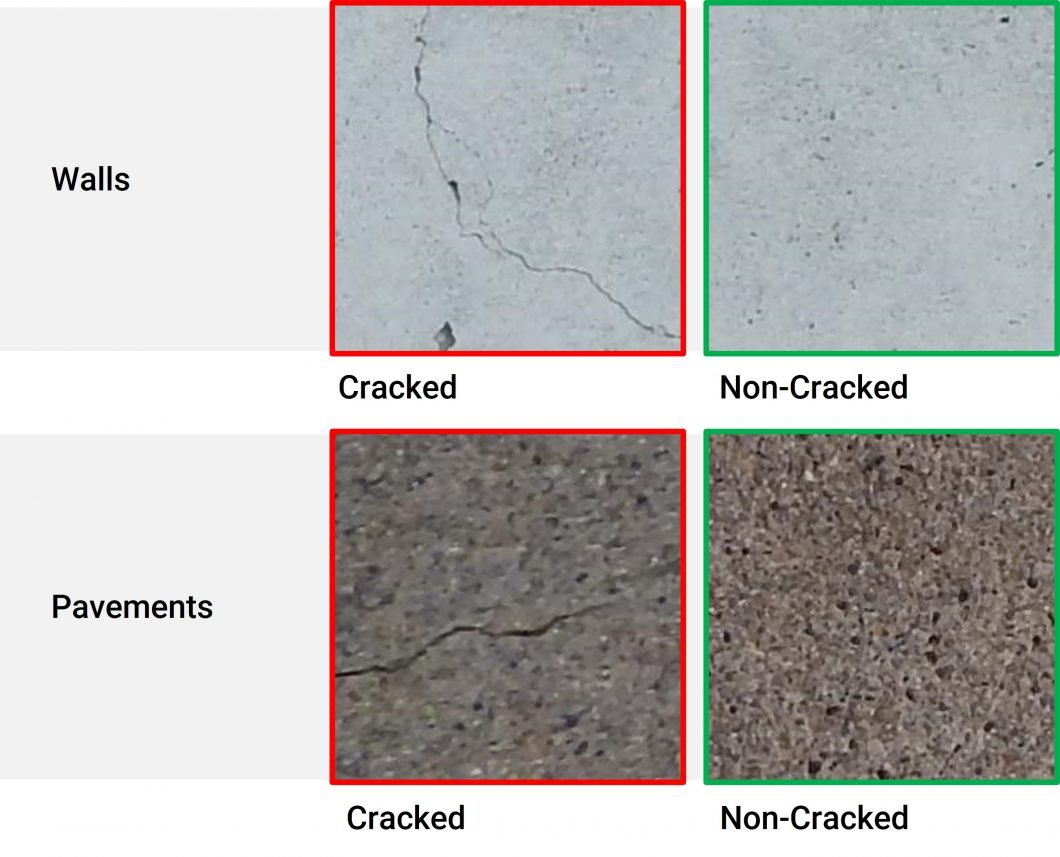

Road Condition Monitoring

Computer vision-based defect detection and condition assessment are developed to monitor concrete and asphalt civil infrastructure. Pavement condition assessment provides information to make more cost-effective and consistent decisions regarding the management of pavement networks.

Generally, pavement distress inspections are performed using sophisticated data collection vehicles and/or foot-on-ground surveys. A deep learning approach for computing an asphalt pavement condition index has been developed to provide a human-independent, inexpensive, efficient, and safe way of automated pavement distress detection via computer vision.

Applied AI vision for detecting structural defects

Another application of computer vision is the visual inspection of roads to detect road potholes and allocate road maintenance to reduce the number of related vehicle accidents.

Infrastructure Condition Assessment

To ensure civil infrastructure’s safety and serviceability, it is essential to visually inspect and assess its physical and functional condition. Systems for Computer Vision-based civil infrastructure inspection and monitoring automatically convert image and video data into actionable information.

Computer Vision inspection applications are used to identify structural components, characterize local and global visible damage, and detect changes from a reference image. Such monitoring applications include static measurement of strain and displacement and dynamic measurement of displacement for modal analysis.

Driver Attentiveness Detection

Distracted driving (such as daydreaming, cell phone usage, and looking at something outside the car) accounts for a large proportion of road traffic fatalities worldwide. Artificial intelligence is used to understand driving behaviors and find solutions to mitigate road traffic incidents.

Road surveillance technologies are used to observe passenger compartment violations, for example, in deep learning-based seat belt detection in road surveillance. In‐vehicle driver monitoring technologies focus on visual sensing, analysis, and feedback.

Driver behavior can be inferred both directly from inward driver‐facing cameras and indirectly from outward scene‐facing cameras or sensors. Techniques based on driver-facing video analytics detect the face and eyes with algorithms for gaze direction, head pose estimation, and facial expression monitoring.

Face detection algorithms have been able to detect attentive vs. inattentive faces. Deep Learning algorithms can detect differences between eyes that are focused and unfocused, as well as signs of driving under the influence.

Multiple vision-based applications use deep learning methods (RNNs and CNNs) for real-time classification of distracted driver posture.

Computer Vision Application for Vehicle Counting

Computer Vision in Retail

Customer Tracking

Deep learning algorithms can process video streams in real time to analyze customer footfall in retail stores. Camera-based methods allow re-using the video stream of common, inexpensive security surveillance cameras. Machine learning algorithms detect people anonymously and without contact to analyze time spent in different areas, waiting times, and queueing times, and to assess service quality.

Customer behavior analytics can help to improve retail store layouts, increase customer satisfaction, and objectively quantify key metrics across multiple locations.

Computer Vision generated heatmap to analyze customer behavior and people flow.
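A heatmap of this kind can be built by binning detected positions into a grid and counting hits per cell. The sketch below assumes positions are already available as floor or image coordinates; the grid size, the sample points, and the function name are illustrative assumptions.

```python
def build_heatmap(positions, width, height, cell):
    """Count how many positions fall into each cell of a width x height area."""
    cols = -(-width // cell)    # ceiling division
    rows = -(-height // cell)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in positions:
        if 0 <= x < width and 0 <= y < height:   # ignore points outside the area
            grid[int(y // cell)][int(x // cell)] += 1
    return grid


# Made-up positions of detected people over time, in a 30 x 20 area.
positions = [(5, 5), (7, 8), (15, 5), (25, 18), (26, 19), (27, 17)]
for row in build_heatmap(positions, width=30, height=20, cell=10):
    print(row)
# [2, 1, 0]
# [0, 0, 3]
```

The resulting counts can be color-mapped and overlaid on a floor plan; only positions are stored, not identities.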

People Counting

Computer vision algorithms are trained with example data to detect and count people. Such people counting technology helps stores collect data about their performance and can also be applied where, as during COVID-19, only a limited number of people are allowed in a store at once.

Theft Detection

Retailers can detect suspicious behavior, such as loitering or accessing off-limits areas, using computer vision algorithms that autonomously analyze the scene.

Waiting Time Analytics

To prevent impatient customers and endless waiting lines, retailers are implementing queue detection technology. Queue detection uses cameras to track and count the number of shoppers in a line. Once a threshold of customers has been reached, the system sounds an alert for clerks to open new checkouts.
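The threshold logic can be sketched as follows. To avoid alerts from a single noisy frame, this example only fires after the count has stayed at or above the threshold for several consecutive frames; that debouncing step, the parameter values, and the function name are assumptions added for illustration.

```python
def queue_alerts(counts, threshold, min_consecutive=3):
    """Return the frame indices at which an alert fires.

    An alert fires once per episode, at the frame where the per-frame queue
    count has been >= threshold for min_consecutive frames in a row.
    """
    alerts = []
    streak = 0
    for index, count in enumerate(counts):
        if count >= threshold:
            streak += 1
            if streak == min_consecutive:
                alerts.append(index)
        else:
            streak = 0
    return alerts


# Made-up per-frame counts of shoppers detected in a queue.
counts = [2, 5, 6, 4, 5, 5, 7, 3, 6]
print(queue_alerts(counts, threshold=5))  # [6]
```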

Social Distancing

To ensure safety precautions are being followed, companies are using distance detectors. A camera tracks employee or customer movement and uses depth sensors to assess the distance between them. Then, depending on their position, the system draws a red or green circle around the person.
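The red/green decision comes down to a pairwise distance check. The sketch below assumes each person has already been mapped to ground-plane coordinates in meters; the 2.0 m minimum distance and the function names are illustrative assumptions.

```python
import math
from itertools import combinations


def too_close(positions, min_distance):
    """Indices of all people closer than min_distance to at least one other."""
    flagged = set()
    for (i, p), (j, q) in combinations(enumerate(positions), 2):
        if math.dist(p, q) < min_distance:
            flagged.update((i, j))
    return flagged


def marker_colors(positions, min_distance=2.0):
    """'red' for people violating the distance, 'green' for everyone else."""
    flagged = too_close(positions, min_distance)
    return ["red" if i in flagged else "green" for i in range(len(positions))]


# Made-up ground-plane positions in meters.
positions = [(0, 0), (1, 0), (5, 5), (9, 9)]
print(marker_colors(positions))  # ['red', 'red', 'green', 'green']
```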

Social Distancing Detection with Computer Vision

Computer Vision in Sports


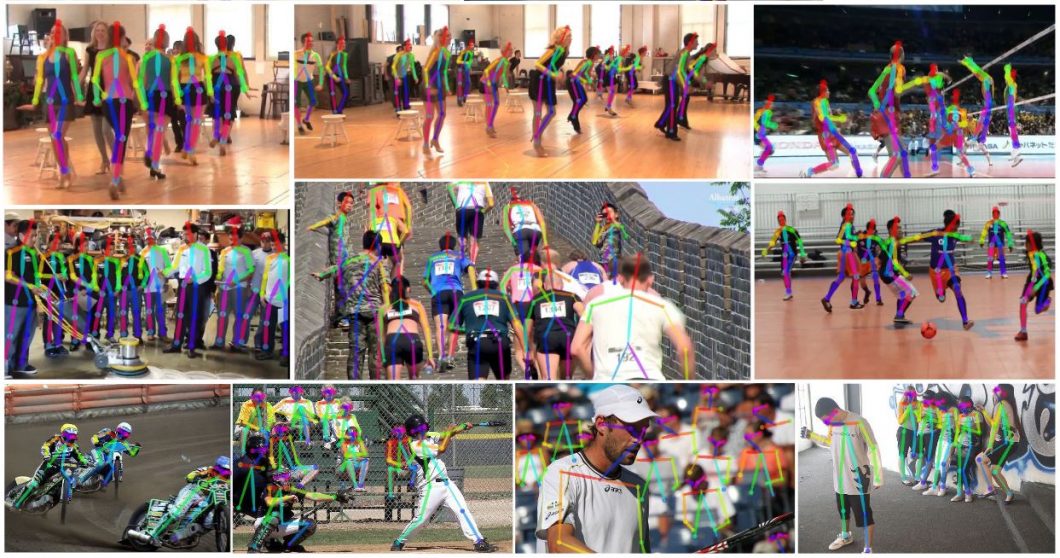

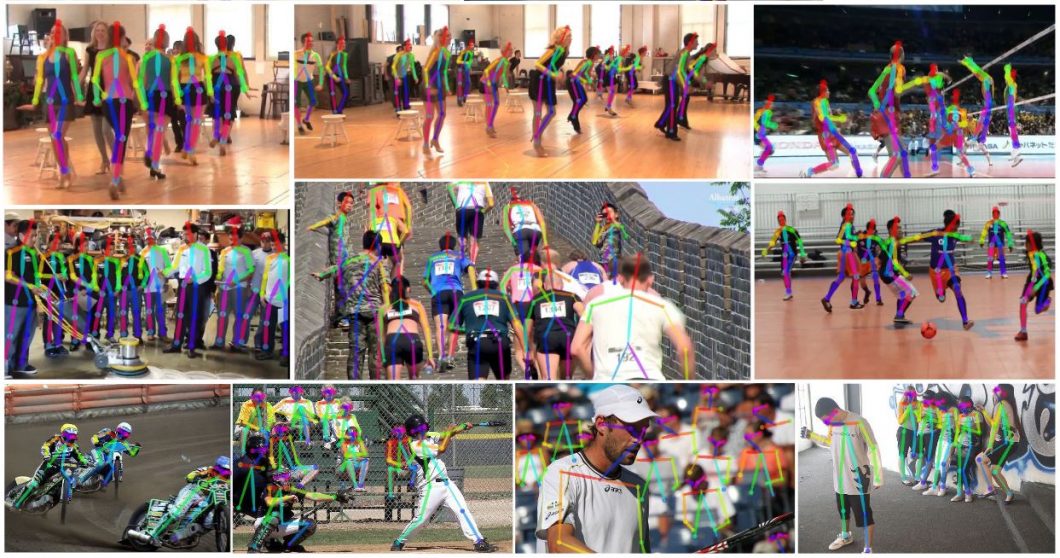

Player Pose Tracking

AI vision can recognize patterns between human body movement and pose over multiple frames in video footage or real-time video streams. For example, human pose estimation has been applied to real-world videos of swimmers where single stationary cameras film above and below the water surface. A popular open-source tool for this is OpenPose, which is useful for real-time keypoint detection.

Human pose keypoint estimation with OpenPose

Those video recordings can be used to quantitatively assess the athletes’ performance without manually annotating the body parts in each video frame. Thus, Convolutional Neural Networks are used to automatically infer the required pose information and detect the swimming style of an athlete.

Markerless Motion Capture

Cameras use pose estimation with deep learning to track the motion of the human skeleton without using traditional optical markers and specialized cameras. This is essential in sports capture, where players cannot be burdened with additional performance capture attire or devices.

Pose estimation with deep learning in Healthcare Applications

Performance Assessment

Automated detection and recognition of sport-specific movements overcome the limitations associated with manual performance analysis methods (subjectivity, quantification, reproducibility). Computer Vision data inputs can be used in combination with the data of body-worn sensors and wearables. Popular use cases are swimming analysis, golf swing analysis, over-ground running analytics, alpine skiing, and the detection and evaluation of cricket bowling.

Soccer ball and player detection in sports with YOLOv7

Multi-Player Pose Tracking

Using computer vision algorithms, the human pose and body movement of multiple team players can be calculated from both monocular (single-camera) and multi-view (multi-camera) sports video datasets. The potential use of estimating the 2D or 3D pose of multiple players in sports is wide-reaching and includes performance analysis, motion capture, and novel applications in broadcast and immersive media.

Stroke Recognition

Computer vision applications are capable of detecting and classifying strokes (for example, classifying strokes in table tennis). Movement recognition or classification involves further interpretations and labeled predictions of the identified instance (for example, differentiating tennis strokes as forehand or backhand).

Stroke recognition aims to provide tools for teachers, coaches, and players to analyze table tennis games and to improve sports skills more efficiently.

Example of Player Tracking With Deep Learning-Based Pose Estimation

Real-Time Coaching

Computer vision-based sports video analytics help to improve resource efficiency and reduce feedback times for time-constrained tasks. Coaches and athletes involved in time-intensive notational tasks, including post-swim race analysis, can benefit from rapid, objective feedback before the next race in the event program.

Self-training systems for sports exercise are a similar, recently emerging computer vision research topic. While self-training is essential in sports exercise, a practitioner may progress only to a limited extent without a coach’s instruction.

For example, a yoga self-training application aims to instruct the practitioner to perform yoga poses correctly, assisting in rectifying poor postures and preventing injury. In addition, vision-based self-training systems can be used to give instructions on how to adjust body posture.

Sports Team Analysis

Analysts in professional team sports regularly perform analysis to gain strategic and tactical insights into player and team behavior (identify weaknesses, assess performance, and improve potential). However, manual video analysis is typically time-consuming, where the analysts need to memorize and annotate scenes.

Computer Vision techniques can be used to extract trajectory data from video material and apply movement analysis techniques to derive relevant team sport analytic measures for region, team formation, event, and player analysis (for example, in soccer team sports analysis).

Ball Tracking

Real-time object tracking is used to detect and capture the movement patterns of objects. Ball trajectory data are one of the most fundamental and useful information in evaluating players’ performance and analysis of game strategies. Hence, tracking of ball movement is an application of deep and machine learning to detect and then track the ball in video frames.

For example, ball tracking is important in sports with large fields (e.g., football) to help newscasters and analysts interpret and analyze a game and its tactics faster.
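The detect-then-track idea can be sketched as follows. This example assumes a detector already returns candidate ball positions for each frame, and it links them with a simple constant-velocity prediction and a distance gate. This is only one possible approach, chosen for brevity; the function name and the `max_jump` parameter are hypothetical.

```python
import math


def track_ball(frames, max_jump=50.0):
    """Link per-frame ball candidates into one track.

    frames: list of lists of (x, y) candidate detections, one list per frame.
    Returns one entry per frame: the chosen (x, y) or None if no candidate fits.
    """
    track = []
    last = None            # last confirmed ball position
    predicted = None       # where the ball is expected in the current frame
    velocity = (0.0, 0.0)  # estimated displacement per frame
    missed = 0             # frames since the last confirmed position
    for candidates in frames:
        if predicted is None:
            # Assumption: start the track from the first available candidate.
            chosen = candidates[0] if candidates else None
        else:
            chosen = min(candidates, key=lambda c: math.dist(c, predicted), default=None)
            if chosen is not None and math.dist(chosen, predicted) > max_jump:
                chosen = None  # too far from the prediction, likely a false detection
        track.append(chosen)
        if chosen is not None:
            if last is not None:
                steps = missed + 1
                velocity = ((chosen[0] - last[0]) / steps, (chosen[1] - last[1]) / steps)
            last = chosen
            missed = 0
            predicted = (last[0] + velocity[0], last[1] + velocity[1])
        elif predicted is not None:
            # Coast on the prediction while the ball is not detected.
            missed += 1
            predicted = (predicted[0] + velocity[0], predicted[1] + velocity[1])
    return track


detections = [[(0, 0)], [(10, 0), (200, 200)], [], [(30, 0), (12, 40)], [(500, 500)]]
print(track_ball(detections))
```

Production systems typically replace the constant-velocity step with a more robust motion model, since a ball changes direction abruptly when kicked or bounced.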

Goal-Line Technology

Camera-based systems can be used to determine whether a goal has been scored, supporting the decision-making of referees. Unlike sensor-based approaches, the AI vision-based method is noninvasive and does not require changes to typical football equipment.

Such Goal-Line Technology systems are based on high-speed cameras whose images are used to triangulate the ball’s position. A ball detection algorithm analyzes candidate ball regions to recognize the ball pattern.
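The triangulation step can be illustrated with a small sketch. It assumes two calibrated cameras, each providing a 3D viewing ray (camera position plus direction) toward the detected ball, and estimates the ball position as the midpoint of the closest points on the two rays. The goal check is deliberately simplified: it only tests whether the whole ball has passed the far edge of the line along one axis and ignores posts and crossbar. All names and the ball radius value are assumptions for illustration.

```python
def _sub(u, v):
    return tuple(a - b for a, b in zip(u, v))


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def _point_on_ray(origin, direction, t):
    return tuple(o + t * d for o, d in zip(origin, direction))


def triangulate_midpoint(o1, d1, o2, d2):
    """Estimate a 3D point from two rays o1 + t1*d1 and o2 + t2*d2."""
    w = _sub(o1, o2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel, cannot triangulate")
    # Parameters of the closest points on each ray.
    t1 = (b * e - c * d) / denom
    t2 = (a * e - b * d) / denom
    p1 = _point_on_ray(o1, d1, t1)
    p2 = _point_on_ray(o2, d2, t2)
    return tuple((u + v) / 2 for u, v in zip(p1, p2))


def whole_ball_over_line(ball_x, goal_line_x=0.0, ball_radius=0.11):
    """True if the entire ball is past the far edge of the goal line.

    Assumes x increases into the goal and ball_radius is in meters (approximate).
    """
    return ball_x - ball_radius > goal_line_x


# Hypothetical camera positions and viewing rays toward the ball.
ball = triangulate_midpoint((0, 0, 0), (1, 2, 3), (10, 0, 0), (-9, 2, 3))
print(ball, whole_ball_over_line(ball[0]))
```

With more than two cameras, the same idea extends to a least-squares fit over all rays, which makes the estimate more tolerant of a single occluded view.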

Event Detection in Sports

Deep Learning can be used to detect complex events from unstructured videos, like scoring a goal in a football game, near misses, or other exciting parts of a game that do not result in a score. This technology can be used for real-time event detection in sports broadcasts, applicable to a wide range of field sports.

Highlight Generation

Producing sports highlights is labor-intensive work requiring some degree of specialization, especially in sports with a complex set of rules that are played over a long time (e.g., cricket). An application example is automatic cricket highlight generation, which uses event-driven and excitement-based features to recognize and clip important events in a cricket match.

Another application is the automatic curation of golf highlights using multimodal excitement features with Computer Vision.
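As a simplified sketch of excitement-based clipping, the example below assumes an upstream model has already produced one excitement score per second of video (for instance from crowd noise, commentator tone, or player reactions). It selects segments above a threshold, pads them with some context, and merges clips that touch or overlap. The function name, threshold, and padding are hypothetical choices.

```python
def highlight_clips(scores, threshold=0.7, padding=1):
    """Return (start, end) second indices, inclusive, of highlight clips."""
    # Step 1: find runs of consecutive seconds at or above the threshold.
    runs = []
    start = None
    for i, score in enumerate(scores):
        if score >= threshold:
            if start is None:
                start = i
        elif start is not None:
            runs.append((start, i - 1))
            start = None
    if start is not None:
        runs.append((start, len(scores) - 1))

    # Step 2: pad each run with context and merge clips that touch or overlap.
    clips = []
    for s, e in runs:
        s = max(0, s - padding)
        e = min(len(scores) - 1, e + padding)
        if clips and s <= clips[-1][1] + 1:
            clips[-1] = (clips[-1][0], max(clips[-1][1], e))
        else:
            clips.append((s, e))
    return clips


# Hypothetical per-second excitement scores.
scores = [0.1, 0.2, 0.9, 0.8, 0.1, 0.1, 0.1, 0.95, 0.2]
print(highlight_clips(scores))             # two separate clips
print(highlight_clips(scores, padding=2))  # padding makes them merge
```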

Sports Activity Scoring

Deep Learning methods can be used for sports activity scoring to assess athletes’ action quality (Deep Features for Sports Activity Scoring). For example, automatic sports activity scoring can be used in diving, figure skating, or vaulting (ScoringNet is a 3D CNN-based application for sports activity scoring).

For example, a diving scoring application assesses the quality of an athlete’s diving performance: it matters whether the athlete’s feet are together and their toes are pointed straight throughout the whole dive.

AI Vision Industry Guides and Applications of Computer Vision Projects

Deep learning and machine learning technology has been used to create computer vision applications in dozens of ways and for industries of all types. Read our industry guides to find more industry-specific applications and get computer vision ideas from real-world case studies.

- Guide #1: Computer Vision in Retail
- Guide #2: Computer Vision in Manufacturing
- Guide #3: Computer Vision in Smart Cities
- Guide #4: Computer Vision in Agriculture and Smart Farming
- Guide #5: Computer Vision in the Education Sector
- Guide #6: Computer Vision in Smart Cities
- Guide #7: Computer Vision in Healthcare
- Guide #8: Computer Vision in Oil and Gas
- Guide #9: Computer Vision in Automotive
- Guide #10: Computer Vision in Insurance
- Guide #11: Computer Vision in Sports
- Guide #12: Computer Vision in Aviation
- Guide #13: Computer Vision in Construction
- Guide #14: Computer Vision Companies and Startups

Get Started With Enterprise Computer AI Vision Algorithms and Applications

At ProX PC, we power the most complete end-to-end computer vision platform. Industry leaders, Fortune 100 companies, and governmental organizations develop and deploy their computer vision applications with our software infrastructure.

ProX PC provides full-scale features to rapidly build, deploy, and scale enterprise-grade computer vision applications. ProX PC helps to overcome integration hassles as well as privacy, security, and scalability challenges, without writing code from scratch.

- Use our end-to-end solution to build, deploy, and scale enterprise computer vision systems.
- Choose from a variety of computer vision tasks to solve your business challenge.
- Deliver computer vision 10x faster with visual programming.
- Use the video input of any conventional camera (surveillance cameras, CCTV, USB, etc.).

We provide all the computer vision services and AI vision experience you’ll need. Get in touch with our team of AI experts and schedule a demo to see the key features.

Related Products

ProX MicroEdge Orin Nano

- Compact AI accelerator with 6-core Arm® Cortex® CPU and 1024/512-core NVIDIA Ampere GPU with Tensor Cores
- 8GB/4GB of high-speed LPDDR5 memory and NVMe SSD
- Dual GbE ports, Wi-Fi options, and 4G/5G support
- Versatile I/O and robust features
- Ideal for data-intensive tasks and AI innovation

ProX MicroEdge Orin NX

- Compact powerhouse that combines an 8/6-core Arm® Cortex® CPU, a 1024-core NVIDIA Ampere GPU with 32 Tensor Cores, and lightning-fast 128-bit LPDDR5 memory.
- Store and retrieve data seamlessly with an NVMe SSD and Micro SD slot.
- Stay connected with dual GbE ports, Wi-Fi options, and 4G/5G support.
- Versatile I/O options, including USB 3.1 and HDMI, make interfacing a breeze.
- Unlock the future of AI innovation with Jetson Orin NX.