
In many computer vision applications (e.g. object tracking and medical imaging), two or more images of the same object or scene, taken from different perspectives, at different times, or under different conditions, need to be aligned. Image registration algorithms transform one image (the moving image) so that it is geometrically aligned with another image (the reference, or fixed, image). This alignment is required in applications such as image fusion, stereo vision, object tracking, and medical image analysis.


Registration applies a transformation, 3-dimensional in the case of volumetric data, to each image in the set relative to the image chosen as the reference.

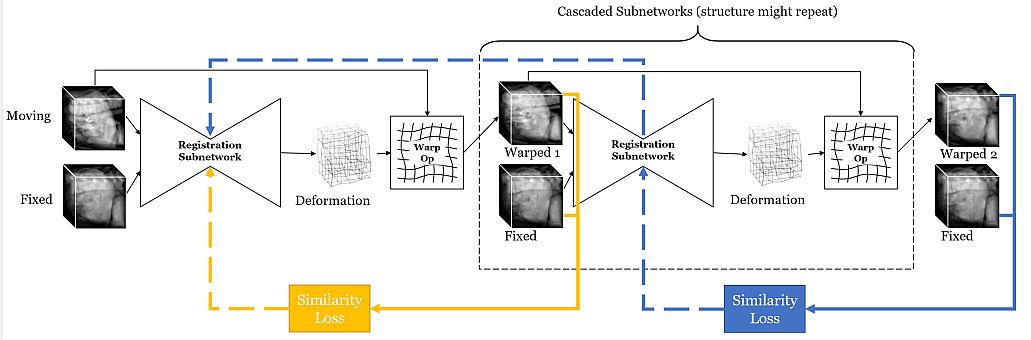

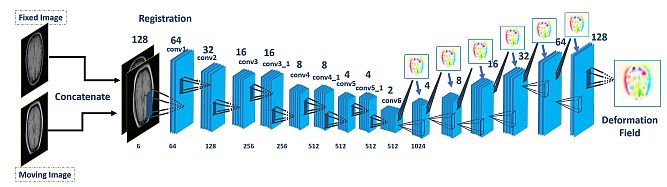

Volume Tweening Network (VTN) for 3D moving image registration. Each subnetwork is responsible for finding the deformation field between the fixed image and the moving image

Image registration is frequently used to align images from diverse camera sources in medical and satellite photography. It can be realized in two ways:

Image to Map Registration: the input image is displaced to match the map information of a base image while keeping its original spatial resolution.

Image registration methods can be classified into two groups: area-based and feature-based methods. Area-based approaches are preferred when images are missing important features and distinguishing information is given by shaded colors rather than clear forms and structures.

Image alignment is the first step in image registration and it is done in 4 steps:

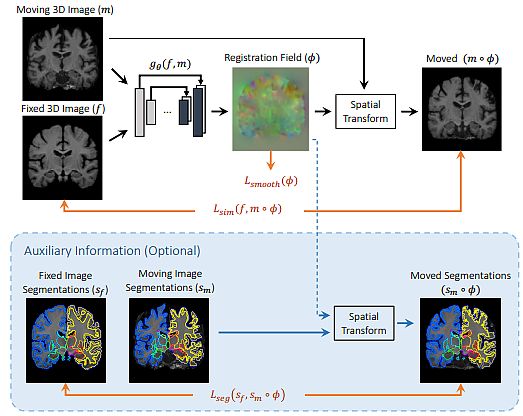

Image Registration with Registration Field and Spatial Transform

Computer Vision Techniques for Image Registration

Here we present common techniques for image registration and their advantages/drawbacks:

Pixel-Based Method

This method applies a cross-correlation statistical methodology for image registration. It is based on pattern matching, which finds the location and orientation of a template or pattern in an image. Cross-correlation is a measure of similarity or a match metric.

The 2-dimensional cross-correlation function measures the similarity between the reference image and the checked image at each candidate translation. Where the template fits the image, the cross-correlation reaches its maximum.

The main drawbacks of the correlation approach are the high processing complexity and the flat similarity maximum (due to the self-similarity of the pictures). The method can be improved by pre-processing or applying edge or vector correlation.
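The translation search described above can be sketched in a few lines. This is a minimal illustration, assuming small grayscale images stored as nested Python lists and using the zero-mean normalized form of cross-correlation as the match metric; the function names `normalized_cross_correlation` and `match_template` are hypothetical and not taken from any library. The two nested loops over every translation also show where the high processing cost comes from.

```python
import math

def normalized_cross_correlation(patch, template):
    """Zero-mean normalized cross-correlation of two equal-sized 2D lists."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mean_p = sum(p) / len(p)
    mean_t = sum(t) / len(t)
    num = sum((a - mean_p) * (b - mean_t) for a, b in zip(p, t))
    den = math.sqrt(
        sum((a - mean_p) ** 2 for a in p) * sum((b - mean_t) ** 2 for b in t)
    )
    # A constant patch has zero variance; treat it as "no match".
    return num / den if den > 0 else 0.0

def match_template(image, template):
    """Try every translation and return (row, col, score) of the best one."""
    th, tw = len(template), len(template[0])
    best = (0, 0, -2.0)  # scores lie in [-1, 1], so -2 is a safe start
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = normalized_cross_correlation(patch, template)
            if score > best[2]:
                best = (r, c, score)
    return best

# Toy data (assumed for illustration): the template sits at row 2, column 2.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 9, 5, 0, 0],
    [0, 0, 4, 8, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
template = [[9, 5], [4, 8]]
row, col, score = match_template(image, template)
```

On real images this exhaustive search is usually replaced by an optimized library routine, but the principle of picking the translation with the highest correlation is the same.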

Contour-Based Image Registration

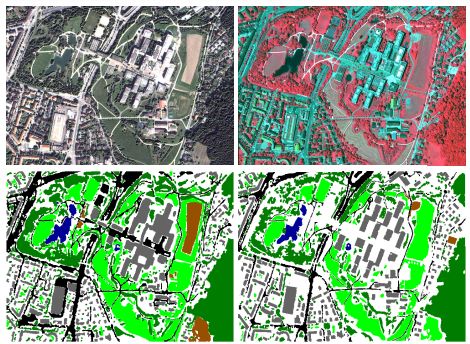

This method uses strong statistical characteristics to match picture feature points. Color image segmentation is used to extract regions of interest from images.

To produce the contour of an image, the mean of a given set of colors is computed. During the segmentation process, each RGB pixel in the image is classified as either falling within a specific color range or not. The Euclidean distance to the mean color is used to measure similarity.

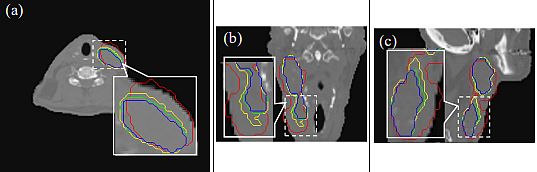

Contour-based image registration from multiple CT scans (contours marked manually)

The two sets of pixels (inside and outside the color range) are coded as a binary, black-and-white image. A Gaussian filter is then applied to suppress noise, and the contour of the image is extracted. The accuracy of the contour method is satisfactory, but its drawback is that it is manual and slow.
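The segmentation and contour steps can be sketched as follows. This is a simplified illustration under stated assumptions: the image is a nested list of RGB tuples, the distance threshold of 60 is arbitrary, the Gaussian smoothing step is omitted, and the contour is taken to be every foreground pixel with a background (or out-of-image) 4-neighbour. All function names are hypothetical.

```python
import math

def mean_color(samples):
    """Mean RGB value of a set of sample pixels."""
    n = len(samples)
    return tuple(sum(px[i] for px in samples) / n for i in range(3))

def segment_by_color(image, reference, threshold):
    """Binary mask: 1 where a pixel lies within `threshold` (Euclidean
    distance in RGB space) of the reference color, else 0."""
    return [
        [1 if math.dist(px, reference) <= threshold else 0 for px in row]
        for row in image
    ]

def contour_pixels(mask):
    """Foreground pixels that touch the background or the image border."""
    h, w = len(mask), len(mask[0])
    contour = []
    for r in range(h):
        for c in range(w):
            if mask[r][c] == 0:
                continue
            neighbours = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
            if any(
                not (0 <= nr < h and 0 <= nc < w) or mask[nr][nc] == 0
                for nr, nc in neighbours
            ):
                contour.append((r, c))
    return contour

# Toy data (assumed): a 3x3 reddish region on a blue 5x5 background.
red, blue = (205, 25, 15), (10, 10, 200)
image = [
    [red if 1 <= r <= 3 and 1 <= c <= 3 else blue for c in range(5)]
    for r in range(5)
]
reference = mean_color([(200, 10, 10), (220, 30, 20), (210, 20, 30)])
mask = segment_by_color(image, reference, threshold=60.0)
contour = contour_pixels(mask)
```

Here the contour consists of the eight outer pixels of the red square; the centre pixel is interior and is excluded.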

Point-Mapping Method

This is the most common method for registering two images with unknown misalignment. It utilizes image features produced from a feature extraction algorithm/process. The fundamental goal of feature extraction is to filter out redundant information.

Features that are present in both images and are more tolerant of local distortions are chosen. Once features have been detected in each image, they must be matched.

Point Mapping (Multimodal) Image Registration

Control points for point matching are crucial in this strategy. Examples of control points are corners, points of locally greatest curvature, contour lines, lines of intersection, centers of frames with locally maximum curvature, and centers of gravity of closed-boundary areas.

The limitation of the feature-based method is the borderline of the frame content. The registration characteristics should be recognized in border areas of the image. Frames may lack this feature, and their selection is usually not based on their content evaluation.
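Once control points have been matched, they can be used to estimate the geometric transform between the two images. The sketch below assumes an affine model and fits it to the point pairs by least squares; the model choice, the helper names (`solve_3x3`, `estimate_affine`, `apply_affine`), and the sample coordinates are all assumptions made for illustration.

```python
def solve_3x3(m, v):
    """Solve the 3x3 linear system m @ x = v by Gaussian elimination."""
    a = [row[:] + [val] for row, val in zip(m, v)]  # augmented copy
    n = 3
    for i in range(n):
        pivot = max(range(i, n), key=lambda r: abs(a[r][i]))
        if abs(a[pivot][i]) < 1e-12:
            raise ValueError("control points are collinear or too few")
        a[i], a[pivot] = a[pivot], a[i]
        for r in range(i + 1, n):
            factor = a[r][i] / a[i][i]
            for c in range(i, n + 1):
                a[r][c] -= factor * a[i][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(a[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (a[i][n] - tail) / a[i][i]
    return x

def estimate_affine(src, dst):
    """Least-squares affine fit mapping src control points onto dst.
    Returns [[a, b, c], [d, e, f]] with x' = a*x + b*y + c, y' = d*x + e*y + f."""
    rows = [(x, y, 1.0) for x, y in src]
    # Normal equations: (A^T A) theta = A^T b, solved once per output axis.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    params = []
    for k in range(2):
        atb = [sum(r[i] * d[k] for r, d in zip(rows, dst)) for i in range(3)]
        params.append(solve_3x3(ata, atb))
    return params

def apply_affine(params, point):
    x, y = point
    return tuple(p[0] * x + p[1] * y + p[2] for p in params)

# Hypothetical matched control points (e.g. corners found in both images).
src = [(0, 0), (1, 0), (0, 1), (1, 1)]
dst = [(3, -1), (5, -2), (3.5, 0), (5.5, -1)]
affine = estimate_affine(src, dst)
mapped = apply_affine(affine, (1, 1))
```

At least three non-collinear control points are needed for an affine fit; using more than three lets the least-squares solution average out small localization errors.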

Feature-Based Registration

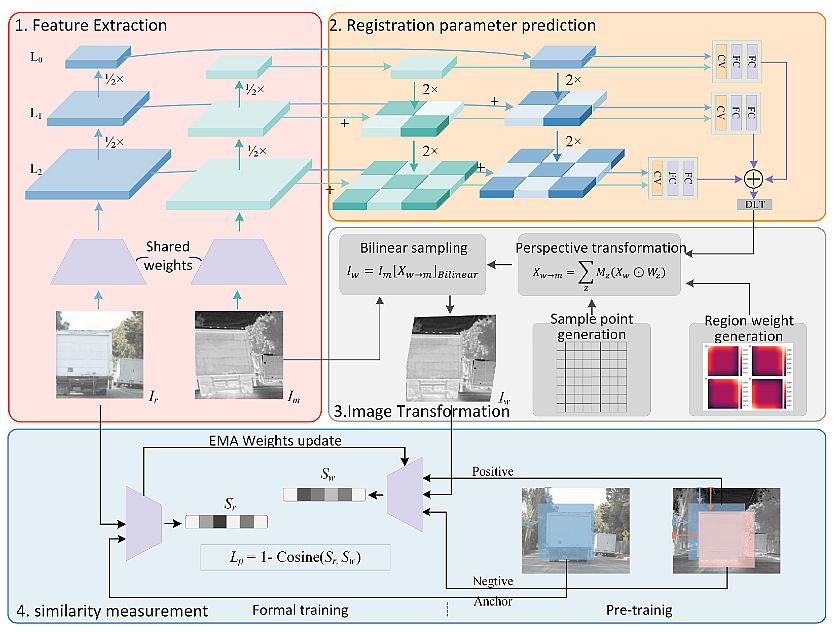

The feature-based matching method can be used when image intensities provide more local structural information. Features produced by a feature extraction technique are used for registration: the method detects and matches key features (such as corners, edges, or interest points) between images, and the transformation parameters are then computed from these matches.

Image Registration done by feature extraction, image transformation, and similarity measurement

This method can handle changes in scale, translation, and rotation, but it could fail in cases of large deformations or occlusions.
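To illustrate how scale, rotation, and translation can be recovered from matched features, the sketch below fits a 2D similarity transform by least squares. It treats each point as a complex number, so the transform becomes `q = a * p + b`, where the magnitude of `a` is the scale and its phase is the rotation angle. The function name `estimate_similarity` and the sample points are assumptions for illustration, and the matches are assumed to be correct (no outlier rejection).

```python
import cmath
import math

def estimate_similarity(src, dst):
    """Least-squares similarity transform mapping src points onto dst.
    Returns (scale, rotation_in_radians, (tx, ty))."""
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    # Closed-form least-squares solution for q = a * p + b.
    num = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q))
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    a = num / den
    b = q_mean - a * p_mean
    return abs(a), cmath.phase(a), (b.real, b.imag)

# Hypothetical matched keypoints: dst is src scaled by 2, rotated by
# 90 degrees, and shifted by (1, -3).
src = [(0, 0), (1, 0), (0, 1), (2, 2)]
dst = [(1, -3), (1, -1), (-1, -3), (-3, 1)]
scale, angle, shift = estimate_similarity(src, dst)
```

A single global transform like this cannot represent large local deformations, and wrong matches caused by occlusion would bias the fit, which is consistent with the failure cases mentioned above.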

Advanced Image Registration Methods

Deep Learning FlowNet architecture

Applications of Image Registration

Image Fusion

Image fusion combines two or more registered images into a new image that is easier to interpret than the originals. It is significant in medical imaging because it produces images better suited to human visual perception. A simple fusion technique is to average the two input images, but this reduces feature contrast.

A better approach is Laplacian pyramid-based image fusion, but it can introduce blocking artifacts. The best fusion results can be achieved by applying the Wavelet Transform to each of the source images.
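The contrast loss caused by simple averaging is easy to see on a toy example. The sketch below assumes two already registered grayscale images stored as nested lists, and measures contrast as the difference between the brightest and darkest pixel; both `average_fusion` and `contrast` are hypothetical helper names.

```python
def average_fusion(img_a, img_b):
    """Fuse two registered, equal-sized images by pixel-wise averaging."""
    return [
        [(a + b) / 2 for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(img_a, img_b)
    ]

def contrast(img):
    """Simple contrast measure: brightest minus darkest pixel."""
    flat = [v for row in img for v in row]
    return max(flat) - min(flat)

# Toy data (assumed): a bright feature is visible only in the first image.
img_a = [
    [100, 100, 100],
    [100, 200, 100],
    [100, 100, 100],
]
img_b = [[100] * 3 for _ in range(3)]

fused = average_fusion(img_a, img_b)
contrast_before = contrast(img_a)  # 100
contrast_after = contrast(fused)   # 50.0
```

The feature that stood 100 gray levels above the background in the first image stands only 50 levels above it after averaging, which is the contrast reduction that pyramid-based and wavelet-based fusion aim to avoid.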

Object Tracking

An object tracking algorithm follows the movement of an object and tries to estimate (predict) its position in a video. An example of such an algorithm is the centroid tracker. It stores the last known bounding boxes, receives a new set of bounding boxes for the next frame, and then matches old boxes to new ones so that the maximum distance between matched objects is minimized.
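The matching step of a centroid tracker can be sketched as below. As a simplifying assumption, this version assigns boxes greedily in order of increasing centroid distance instead of solving the exact assignment problem; boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the names `centroid` and `match_boxes` are hypothetical.

```python
import math

def centroid(box):
    """Centre point of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)

def match_boxes(tracked, detections):
    """Match tracked objects to new detections by centroid distance.
    tracked: dict of object id -> last known box.
    detections: list of boxes from the current frame.
    Returns a dict of object id -> index into detections."""
    # All candidate pairs, closest first (greedy simplification).
    pairs = sorted(
        (math.dist(centroid(box), centroid(det)), obj_id, j)
        for obj_id, box in tracked.items()
        for j, det in enumerate(detections)
    )
    assignment, used = {}, set()
    for _, obj_id, j in pairs:
        if obj_id not in assignment and j not in used:
            assignment[obj_id] = j
            used.add(j)
    return assignment

# Toy data (assumed): two objects that each moved slightly between frames.
tracked = {1: (0, 0, 10, 10), 2: (50, 50, 60, 60)}
detections = [(52, 51, 62, 61), (2, 1, 12, 11)]
assignment = match_boxes(tracked, detections)
```

Object 1 is matched to the second detection and object 2 to the first, because those pairings have the smallest centroid distances. A full tracker would also handle objects that appear or disappear between frames.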

When the same scene is captured by different sensors, object tracking requires the heterogeneous images to be correctly registered in advance using cross-modal image registration. Recent deep learning approaches use neural networks with large numbers of parameters to predict feature points.


Multiple Object Tracking (MOT) vs. General Object Detection

Medical Imagery

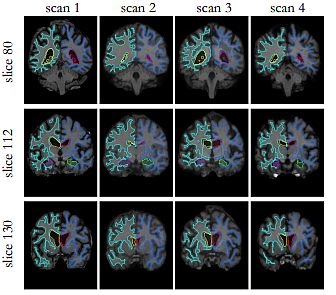

Medical image registration tries to find the optimal spatial transformation that best aligns the underlying anatomical structures. It is used in many clinical applications such as image reconstruction, image guidance, motion tracking, segmentation, and dose accumulation. Medical image registration is a broad topic and can be considered from different points of view.

From an input image perspective, registration methods can be divided into unimodal, multimodal, inter-patient, and intra-patient registration. From a deformation model point of view, registration methods can be divided into rigid, affine, and deformable methods. From a region of interest (ROI) perspective, registration methods can be grouped according to anatomical sites, such as brain or lung registration.
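The difference between the rigid and affine deformation models can be shown with 2D transform matrices. In this sketch, which uses assumed example parameters and hypothetical helper names, a rigid transform (rotation plus translation) preserves the distance between two points, while a general affine transform (which also allows scaling and shear) does not. Deformable models, which move each point by its own displacement, are not shown.

```python
import math

def rigid_matrix(theta, tx, ty):
    """2x3 matrix for a rotation by theta followed by a translation."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, tx], [s, c, ty]]

def transform(matrix, point):
    """Apply a 2x3 transform matrix to a 2D point."""
    x, y = point
    return (
        matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
        matrix[1][0] * x + matrix[1][1] * y + matrix[1][2],
    )

# Example parameters (assumed for illustration).
rigid = rigid_matrix(math.pi / 6, 5.0, -2.0)
affine = [[1.5, 0.4, 5.0], [0.0, 0.8, -2.0]]  # includes scaling and shear

p, q = (0.0, 0.0), (3.0, 4.0)
original_distance = math.dist(p, q)  # 5.0
rigid_distance = math.dist(transform(rigid, p), transform(rigid, q))
affine_distance = math.dist(transform(affine, p), transform(affine, q))
```

The rigid transform leaves the distance at 5 (up to floating-point rounding), whereas the affine transform stretches it, which is why the affine model has more parameters to estimate.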

Image Registration by Multiple MRI Brain Scans with affine transformation alignment

Limitations of Image Registration

Image registration has certain limitations, such as:

Illumination (Viewpoint) Changes: Registration methods can struggle to cope when images have significant changes in lighting conditions or viewpoints.
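A small numeric example shows why lighting changes are a problem for intensity-based comparison. The sketch assumes a sum-of-squared-differences (SSD) score, where lower means more similar, and compares one image row against two candidates; the data and the helper name `ssd` are illustrative assumptions.

```python
def ssd(a, b):
    """Sum of squared intensity differences (lower means more similar)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

# One row of a reference image with a single bright feature.
reference = [10, 10, 50, 10, 10]

# Candidate 1: perfectly aligned, but captured under brighter lighting.
aligned_brighter = [2 * v + 20 for v in reference]

# Candidate 2: same lighting, but the feature is shifted by one pixel.
misaligned = [10, 50, 10, 10, 10]

score_aligned = ssd(reference, aligned_brighter)  # 8500
score_misaligned = ssd(reference, misaligned)     # 3200
```

The correctly aligned candidate gets the worse score purely because of the brightness change, so a registration driven by raw intensity differences would prefer the wrong alignment here.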

Summary

Image registration is an important technique for the integration, fusion, and evaluation of data from multiple sources (sensors). It has many applications in computer vision, medical imaging, and remote sensing.

Registration under complicated nonlinear distortions, multi-modal registration, and registration of occluded images all contribute to the robustness of computer vision methods in the hardest use cases.

For more info visit www.proxpc.com
