Llama 3 is a large language model from Meta that needs high-performance hardware to run efficiently. To run it smoothly, you need a powerful CPU, sufficient RAM, and a GPU with enough VRAM. The right hardware gives you faster inference and more efficient training. Below are the key hardware requirements to consider before setting up a system for Llama 3.

A strong CPU is essential for handling various computational tasks and managing data flow to the GPU. While Llama 3 is GPU-intensive, the CPU plays an important role in pre-processing and parallel operations.

Minimum CPU Requirement: AMD Ryzen 7 or Intel Core i7 (12th Gen or newer)

Recommended CPU: AMD Ryzen 9 or Intel Core i9 (13th Gen or newer)

High-End Option: AMD Threadripper or Intel Xeon for large-scale AI applications

A higher core count helps improve efficiency, especially for training large models. Multi-threading capability also plays a role in optimizing workloads.

RAM is crucial for storing temporary data and ensuring smooth execution. Llama 3 needs a large amount of RAM to handle multiple tasks and large datasets effectively.

Minimum RAM: 32GB DDR5

Recommended RAM: 64GB DDR5

For Large-Scale Use: 128GB+ DDR5

Faster RAM (e.g., DDR5-5200 or higher) provides more memory bandwidth, which shortens data access and processing times.
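To put a number on the benefit of faster RAM, here is a minimal sketch of the usual theoretical peak bandwidth calculation. It assumes a 64-bit memory channel and a dual-channel desktop configuration; the function name `peak_bandwidth_gb_s` is illustrative, and real-world throughput will be lower than these peak figures.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s, channels=2, bus_width_bits=64):
    """Theoretical peak memory bandwidth in GB/s.

    transfer_rate_mt_s: the number in the DDR5 rating (DDR5-5200 -> 5200 MT/s).
    channels and bus_width_bits are assumptions for a typical desktop board.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * 1e6 * bytes_per_transfer * channels / 1e9


for rate in (4800, 5200, 6000):
    print(f"DDR5-{rate}, dual channel: {peak_bandwidth_gb_s(rate):.1f} GB/s")
```

Under these assumptions, DDR5-5200 in dual channel peaks at about 83 GB/s, compared with about 77 GB/s for DDR5-4800.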

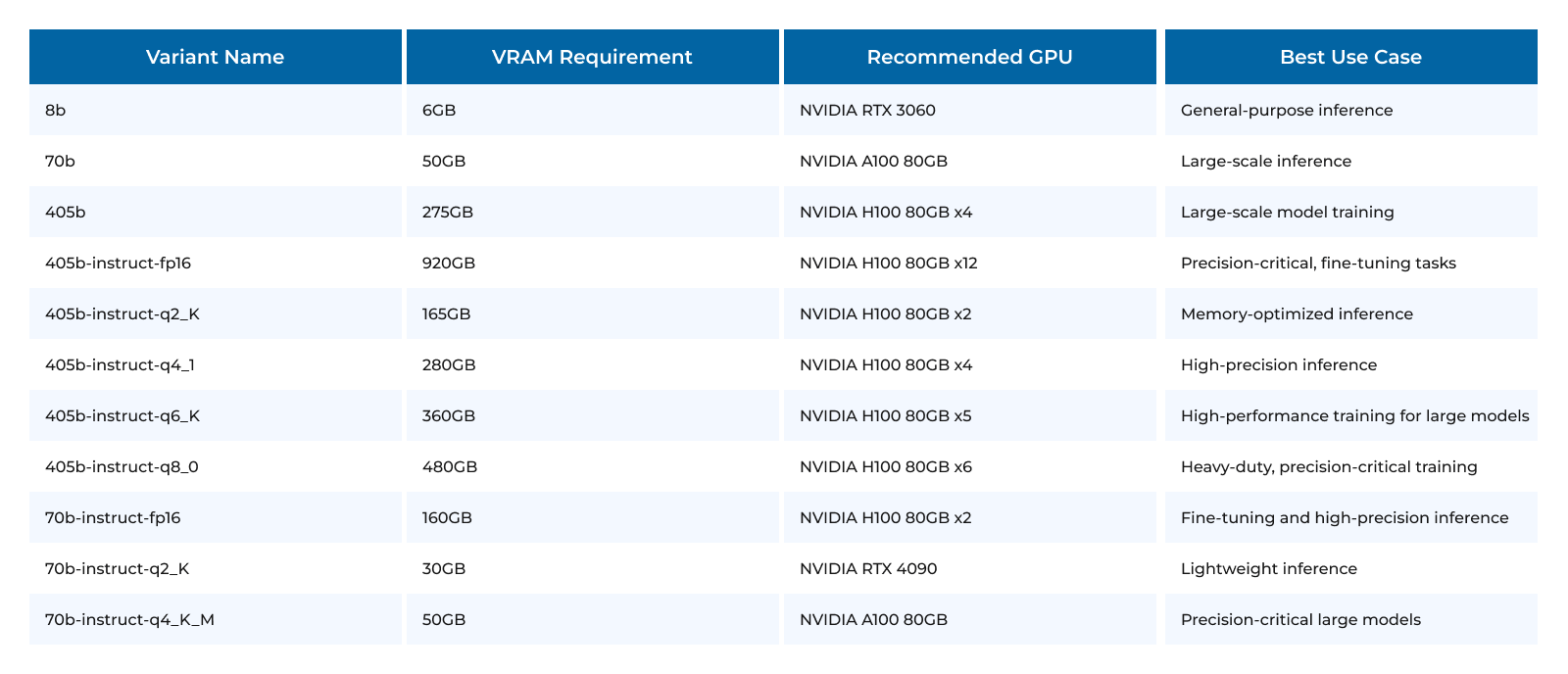

Llama 3 is heavily dependent on the GPU for training and inference. The amount of VRAM (video memory) plays a significant role in determining how well the model runs.

Minimum GPU VRAM: 24GB (e.g., NVIDIA RTX 3090, RTX 4090, or equivalent)

Recommended VRAM: 48GB (e.g., NVIDIA RTX 6000 Ada, RTX A6000, AMD Radeon Pro W7900)

For Large Models and Training: 80GB+ (e.g., NVIDIA H100, A100 80GB, or AMD Instinct MI300X)

For multi-GPU setups, the GPUs communicate over PCIe or, on cards that support it, NVLink, which offers higher GPU-to-GPU bandwidth than PCIe.
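The VRAM tiers above can be sanity-checked with simple arithmetic: the memory needed for the model weights is roughly the parameter count times the bytes per parameter. The sketch below uses the nominal 8B and 70B Llama 3 sizes. The 20% overhead for KV cache and activations is an assumed rule of thumb, not a measured figure, and the function names are illustrative.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions, precision):
    """Approximate memory for the model weights alone, in GB."""
    return params_billions * 1e9 * BYTES_PER_PARAM[precision] / 1e9


def fits(params_billions, precision, vram_gb, overhead_fraction=0.2):
    """Check whether weights plus an assumed overhead fit in the given VRAM.

    overhead_fraction is a rough allowance for KV cache and activations;
    it grows with context length and batch size, so treat it as a guess.
    """
    needed = weight_memory_gb(params_billions, precision) * (1 + overhead_fraction)
    return needed <= vram_gb


print(weight_memory_gb(8, "fp16"))   # 8B model in FP16
print(weight_memory_gb(70, "fp16"))  # 70B model in FP16
print(fits(8, "fp16", 24))           # 8B FP16 on a 24GB card
print(fits(70, "int4", 48))          # 70B 4-bit on a 48GB card
print(fits(70, "fp16", 80))          # 70B FP16 on a single 80GB card
```

By this estimate, the 8B model in FP16 fits on a 24GB card, and the 70B model fits on a 48GB card only when quantized to 4-bit. In FP16 the 70B model exceeds a single 80GB GPU and has to be split across several.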

Llama 4 is expected to be more powerful and demanding than Llama 3. It may require even better hardware to run efficiently.

Expected CPU Requirement: AMD Ryzen 9 7950X or Intel Core i9 14900K

Expected RAM Requirement: 128GB DDR5 or higher

Expected GPU Requirement: 80GB VRAM minimum (e.g., NVIDIA H200, AMD MI400)

If you plan to upgrade to Llama 4 in the future, ensure your hardware is future-proof by investing in high-end components.

Choosing the right hardware depends on your specific use case. Here are some factors to consider:

High-end GPUs and CPUs can be expensive. Choose based on your workload and budget.

If cost is a concern, consider cloud-based GPU instances to avoid upfront hardware costs.

Inference Only: A single high-end GPU with 48GB+ VRAM may be sufficient.

Training & Fine-Tuning: Requires multiple GPUs and at least 128GB RAM.
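To see why training and full fine-tuning need multiple GPUs, a common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter: 2 for FP16 weights, 2 for FP16 gradients, and 12 for FP32 master weights and the two Adam moment estimates. The sketch below applies that rule. It ignores activation memory and assumes the training state is sharded evenly across GPUs, so the results are lower bounds. The names are illustrative.

```python
import math

# Rule-of-thumb bytes per parameter for mixed-precision Adam training:
# 2 (fp16 weights) + 2 (fp16 gradients) + 12 (fp32 weights, momentum, variance).
BYTES_PER_PARAM_TRAINING = 16


def training_state_gb(params_billions):
    """Approximate memory for weights, gradients, and optimizer state, in GB."""
    return params_billions * BYTES_PER_PARAM_TRAINING


def min_gpus(params_billions, vram_per_gpu_gb=80):
    """Lower bound on GPU count, assuming perfectly even sharding
    and no allowance for activations."""
    return math.ceil(training_state_gb(params_billions) / vram_per_gpu_gb)


print(training_state_gb(8), min_gpus(8))    # 8B model on 80GB GPUs
print(training_state_gb(70), min_gpus(70))  # 70B model on 80GB GPUs
```

Even the 8B model needs about 128GB of training state under this rule, which is more than one 80GB GPU can hold. Parameter-efficient methods such as LoRA reduce this considerably.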

AI workloads are power-intensive. Ensure your system has a high-wattage power supply (1000W+).

Liquid cooling is recommended for high-end GPUs and CPUs to prevent overheating.
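A rough way to size the power supply is to add up the rated power of the main components and leave headroom for transient spikes. The sketch below uses illustrative figures (about 450W for an RTX 4090 and 170W for a Ryzen 9 7950X, plus an assumed 100W for the rest of the system). The 30% headroom and the function name are assumptions, not a vendor recommendation.

```python
import math


def recommended_psu_watts(component_watts, headroom=0.3, step=50):
    """Sum component power, add headroom, and round up to the next `step` watts.

    component_watts: dict of component name -> rated power in watts.
    headroom: assumed safety margin for transient power spikes.
    """
    total = sum(component_watts.values())
    target = total * (1 + headroom)
    return math.ceil(target / step) * step


single_gpu_build = {"gpu": 450, "cpu": 170, "rest_of_system": 100}
print(recommended_psu_watts(single_gpu_build))  # 950
```

With these figures a single-GPU build lands just under 1000W, and each extra GPU raises the requirement well past it.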

Consider a motherboard with multiple PCIe slots for future GPU expansion.

To run Llama 3 and Llama 4 efficiently in 2025, you need a powerful CPU, at least 64GB RAM, and a GPU with 48GB+ VRAM. For large-scale AI applications, a multi-GPU setup with 80GB+ VRAM per GPU is ideal. If you plan to upgrade to Llama 4, investing in high-end hardware now can save costs later. Consider budget, workload, cooling, and expandability when choosing your hardware.

By selecting the right components, you can maximize performance and efficiency when working with Llama 3, Llama 4, and future AI models.

Explore custom workstations at proxpc.com
