- FPGAs, or Field-Programmable Gate Arrays, play an important role in accelerating Artificial Intelligence tasks. These versatile chips can be programmed and reprogrammed to execute specific algorithms, making them adaptable to a wide range of AI applications. Their architecture allows many operations to run in parallel, which can significantly speed up computations compared with traditional CPU-based systems. In this article, we look at FPGA technology and its impact on AI acceleration.

- To understand the significance of FPGAs in AI acceleration, it's essential to grasp the fundamentals of both FPGA architecture and AI algorithms. FPGAs consist of an array of configurable logic blocks interconnected through programmable routing resources. This structure allows an algorithm to be implemented directly in hardware as a dedicated circuit, rather than as a sequence of instructions that a processor must fetch, decode, and execute one after another, which can lead to faster execution.
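As a rough illustration of what "configurable" means, the sketch below models a logic block as a small lookup table (LUT), which is how most commercial FPGAs build their logic: the table's contents decide which Boolean function the block computes. The class name `Lut` and the helper `full_adder` are hypothetical, and real logic blocks also contain flip-flops, carry chains, and routing that this toy model leaves out.

```python
from itertools import product


class Lut:
    """Toy model of a k-input lookup table (hypothetical, for illustration only)."""

    def __init__(self, num_inputs, truth_table):
        if len(truth_table) != 2 ** num_inputs:
            raise ValueError("truth table must have 2**num_inputs entries")
        self.num_inputs = num_inputs
        self.truth_table = list(truth_table)

    @classmethod
    def from_function(cls, num_inputs, func):
        # "Programming" the LUT: evaluate func once per input combination
        # and store the results, much as a configuration bitstream fills the table.
        table = [int(bool(func(*bits))) for bits in product((0, 1), repeat=num_inputs)]
        return cls(num_inputs, table)

    def __call__(self, *bits):
        # Evaluation is a plain table lookup: the input bits form the address.
        index = 0
        for bit in bits:
            index = (index << 1) | (bit & 1)
        return self.truth_table[index]


# Two 3-input LUTs configured as the sum and carry outputs of a full adder.
sum_lut = Lut.from_function(3, lambda a, b, cin: a ^ b ^ cin)
carry_lut = Lut.from_function(3, lambda a, b, cin: (a & b) | (cin & (a ^ b)))


def full_adder(a, b, cin):
    """Return (sum, carry_out) using only the two configured LUTs."""
    return sum_lut(a, b, cin), carry_lut(a, b, cin)
```

Loading different truth tables into the same `Lut` objects would turn them into a different circuit, which is the sense in which an FPGA is reprogrammed rather than re-fabricated.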

- AI algorithms, particularly deep learning models, involve large volumes of mathematical computation performed on large datasets. Most of this work consists of matrix multiplications, which are inherently parallelizable because each output element can be computed independently of the others. General-purpose CPUs, however, have relatively few cores and are optimized for sequential work, so they handle such parallel tasks less efficiently and take longer to process them.
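To see why matrix multiplication parallelizes so well, the minimal sketch below splits a product into one independent dot product per output element and hands those tasks to a thread pool. The function names are hypothetical, and the matrices are assumed to be non-empty lists of equal-length rows.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of one row of A with one column of B."""
    return sum(x * y for x, y in zip(row, col))


def matmul_sequential(a, b):
    """Reference version: compute output elements one after another."""
    cols = list(zip(*b))  # columns of B
    return [[dot(row, col) for col in cols] for row in a]


def matmul_independent(a, b, workers=4):
    """Same product, expressed as independent tasks (one per output element)."""
    cols = list(zip(*b))
    tasks = [(row, col) for row in a for col in cols]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves task order, so results come back row by row.
        flat = list(pool.map(lambda task: dot(*task), tasks))
    n_cols = len(cols)
    return [flat[i:i + n_cols] for i in range(0, len(flat), n_cols)]
```

Threads in CPython will not make this toy version faster. The point is only that no task depends on another task's result, which is the property that lets parallel hardware compute many output elements at once.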

- Here's where FPGAs shine: their parallel processing capabilities align perfectly with the requirements of AI algorithms. By harnessing the parallelism inherent in AI tasks, FPGAs can execute multiple computations simultaneously, drastically reducing processing times. Moreover, FPGAs can be customized to match the specific requirements of a given AI model, further enhancing performance and efficiency.
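A back-of-the-envelope model of this effect is sketched below. It assumes an idealized design in which every multiply-accumulate (MAC) unit completes one operation per clock cycle and data is always available; the function names and the unit counts are hypothetical and do not describe any real device.

```python
import math


def mac_count(m, k, n):
    """Multiply-accumulate operations in an (m x k) by (k x n) matrix product."""
    return m * k * n


def ideal_cycles(m, k, n, mac_units):
    """Cycles needed if mac_units MACs run in parallel, one operation each per cycle.

    Idealized: ignores memory bandwidth, pipeline fill, and routing limits.
    """
    if mac_units < 1:
        raise ValueError("mac_units must be at least 1")
    return math.ceil(mac_count(m, k, n) / mac_units)


def ideal_speedup(m, k, n, mac_units):
    """Upper bound on speedup relative to a single MAC unit."""
    return ideal_cycles(m, k, n, 1) / ideal_cycles(m, k, n, mac_units)
```

For a 4 x 8 by 8 x 4 product, `ideal_cycles(4, 8, 4, 16)` gives 8 cycles against 128 for a single unit. Real designs fall short of this bound once data movement and resource limits are taken into account.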

- One of the key advantages of FPGA-based AI acceleration is its flexibility. Unlike fixed-function ASICs (Application-Specific Integrated Circuits) designed for specific tasks, FPGAs can be reconfigured on the fly to adapt to changing AI workloads. This adaptability is particularly beneficial in scenarios where AI models evolve rapidly, such as in research environments or industries like autonomous vehicles and healthcare.

- Furthermore, FPGAs can offer better power efficiency than GPUs (Graphics Processing Units), which are commonly used for AI acceleration. While GPUs excel at parallel processing, they typically consume more power, which limits their suitability for edge computing and other power-constrained applications. For workloads where FPGAs can deliver similar performance at lower power consumption, they emerge as an attractive alternative.

- Another aspect worth highlighting is the ease of deployment offered by FPGAs. Unlike ASICs, which require costly and time-consuming fabrication, FPGAs are available off the shelf and can be integrated into existing hardware systems. This availability puts FPGA-based AI acceleration within reach of a broader range of users, from small startups to large enterprises.

- Moreover, FPGAs facilitate rapid prototyping and experimentation in AI research and development. Researchers can quickly iterate on their algorithms by deploying them onto FPGAs, allowing for faster validation and optimization cycles. This agility is invaluable in driving innovation and pushing the boundaries of AI technology.

- However, despite their numerous advantages, FPGAs also pose certain challenges in AI acceleration. One such challenge is the programming complexity of designing FPGA-based solutions. Unlike programming for CPUs or GPUs, which typically relies on high-level languages and platforms such as Python or CUDA C++, FPGA programming often requires expertise in hardware description languages (HDLs) such as Verilog or VHDL.

- Additionally, optimizing algorithms for FPGA implementation requires a deep understanding of both the algorithm itself and the underlying FPGA architecture. Achieving peak performance on FPGAs often involves manual tuning and optimization, which can be time-consuming and resource-intensive.

- Furthermore, while FPGAs offer flexibility and reconfigurability, they may not always match the performance of specialized ASICs designed specifically for AI acceleration. ASICs, being tailored to a particular task, can achieve higher levels of efficiency and performance but lack the versatility of FPGAs.

- Despite these challenges, the role of FPGAs in AI acceleration continues to grow, driven by their combination of flexibility, performance, and power efficiency. As AI workloads become increasingly diverse and demanding, FPGAs are well positioned to meet those demands and open up new possibilities in artificial intelligence.

In conclusion, FPGAs are well suited to accelerating AI tasks, thanks to their adaptability and parallel processing capabilities. Despite the challenge of programming complexity, they offer more flexibility than CPUs and GPUs and, for many workloads, better power efficiency. Specialized ASICs may outperform FPGAs on specific tasks, but the ASICs' lack of versatility limits where they can be applied. FPGAs also enable rapid prototyping and experimentation, driving innovation in AI research and development. As AI workloads evolve, FPGAs will continue to play an important role in meeting the demands of diverse applications.

For more info visit www.proxpc.com

Edge Computing Products

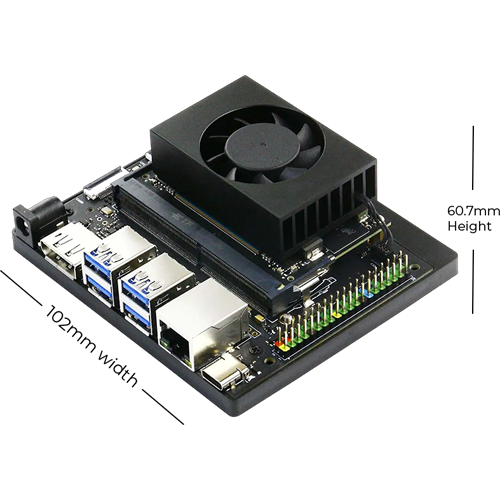

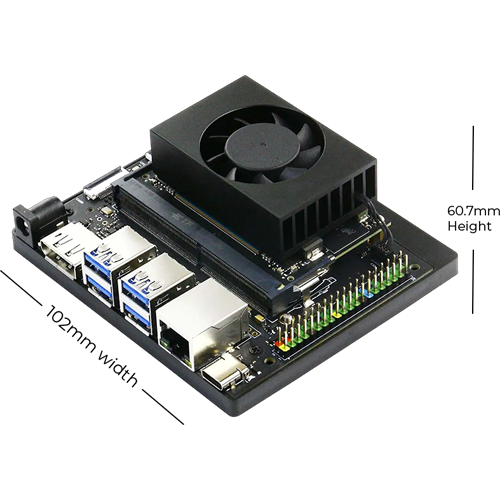

ProX Micro Edge Orin Developer Kit

Learn More

ProX Micro Edge Orin NX

Learn More

ProX Micro Edge Orin Nano

Learn More

ProX Micro Edge AGX Orin

Learn More