
Edge Intelligence, or Edge AI, moves AI computing from the cloud to edge devices, where the data is generated. This is key to building distributed and scalable AI systems for resource-intensive applications such as computer vision. Explore ProX micro edge devices.

In this article, we discuss the following topics:

1. What is Edge Computing, and why do we need it?

2. What is Edge Intelligence or Edge AI?

3. Moving Deep Learning Applications to the Edge

4. On-Device AI and Inference at the Edge

5. Edge Intelligence enables AI democratization

Edge Computing Trends

With the breakthroughs in deep learning, recent years have witnessed a boom in artificial intelligence (AI) applications and services. Driven by the rapid advances in mobile computing and the Artificial Intelligence of Things (AIoT), billions of mobile and IoT devices are connected to the Internet, generating enormous volumes of data at the network edge.

Accelerated by the success of AI and IoT technologies, there is an urgent need to push the AI frontiers to the network edge to fully unleash the potential of big data. To realize this trend, Edge Computing is a promising concept to support computation-intensive AI applications on edge devices.

Edge Intelligence or Edge AI is a combination of AI and Edge Computing; it enables the deployment of machine learning algorithms to the edge device where the data is generated. Edge Intelligence has the potential to provide artificial intelligence for every person and every organization at any place.

What is Edge Computing

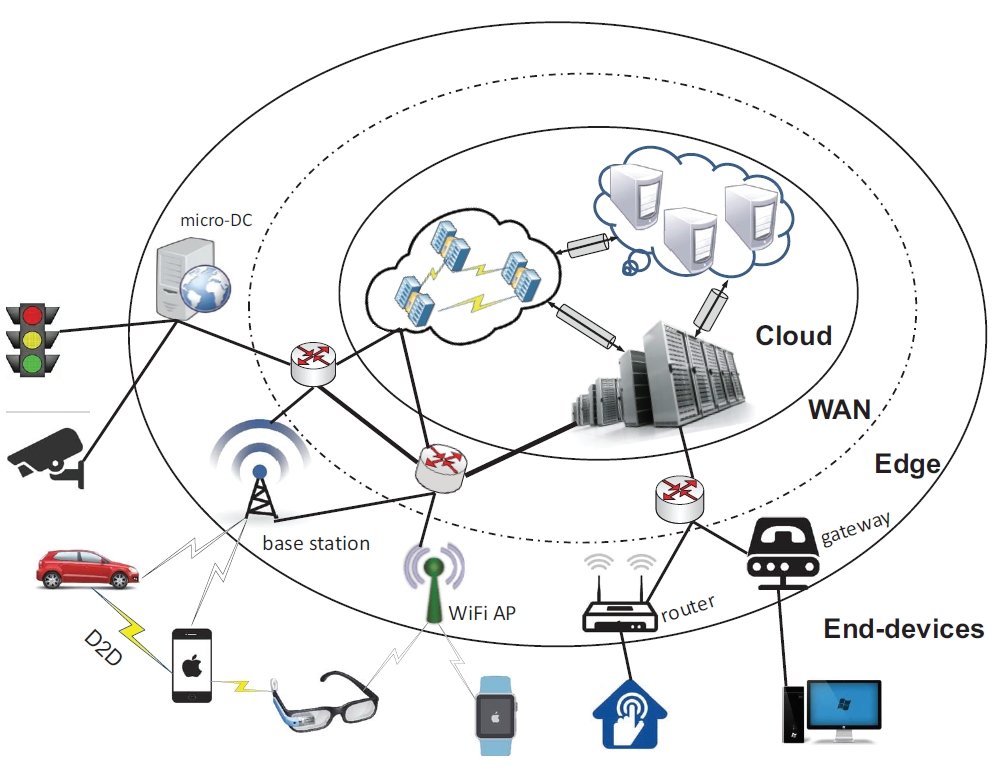

Edge Computing is the concept of capturing, storing, processing, and analyzing data closer to the location where it is needed to improve response times and save bandwidth. Hence, edge computing is a distributed computing framework that brings applications closer to data sources such as IoT devices, local end devices, or edge servers.

The rationale of edge computing is that computing should happen in the proximity of data sources. Therefore, we envision that edge computing could have as big an impact on our society as we have witnessed with cloud computing.

Concept of Edge Computing

Why We Need Edge Intelligence

Data Is Generated At the Network Edge

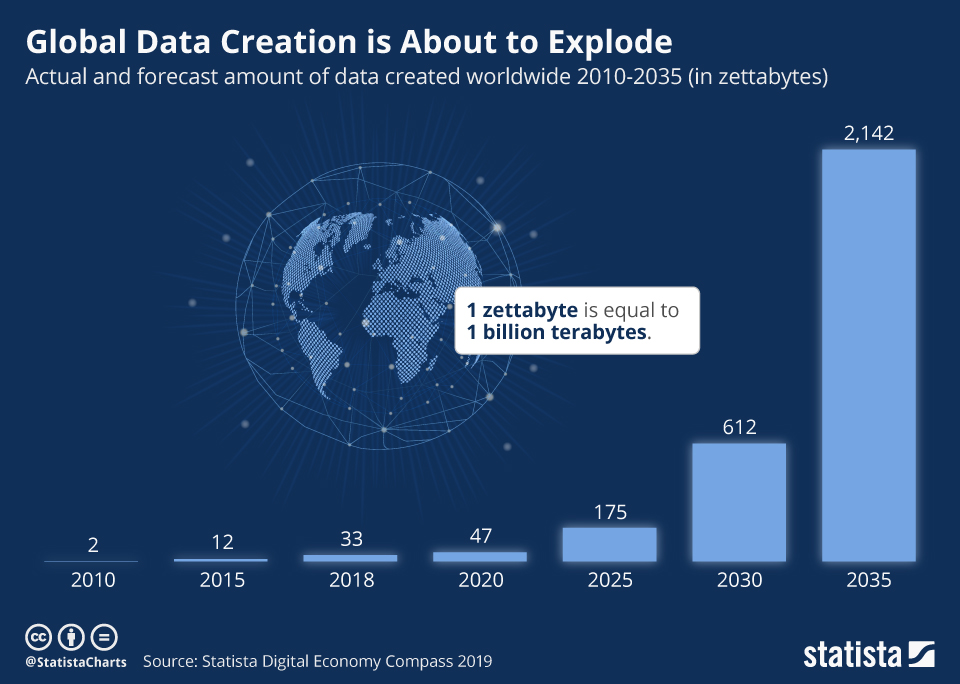

As a key driver of AI development, big data has recently gone through a radical shift in its sources, from mega-scale cloud data centers to increasingly widespread end devices such as mobile, edge, and IoT devices. Traditionally, big data, such as online shopping records, social media content, and business informatics, was mainly created and stored at mega-scale data centers. However, with the emergence of mobile computing and IoT, the trend is now reversing.

Today, large numbers of sensors and smart devices generate massive amounts of data, and ever-increasing computing power is moving the core of computation and services from the cloud to the edge of the network. Over 50 billion IoT devices are connected to the Internet, and IDC forecasts that, by 2025, 80 billion IoT devices and sensors will be online.

Global data creation is about to grow even faster.

Cisco’s Global Cloud Index estimated that nearly 850 zettabytes (ZB) of data would be generated each year outside the cloud by 2021, while global data center traffic was projected to be only 20.6 ZB, roughly 40 times less. This indicates that the sources of data are shifting from large-scale cloud data centers to an increasingly wide range of edge devices. Meanwhile, cloud computing is increasingly unable to manage this massively distributed computing power and analyze the data it produces.

Edge Computing Offers Data Processing At the Data Source

Edge Computing is a paradigm to push cloud services from the network core to the network edges. The goal of Edge Computing is to host computation tasks as close as possible to the data sources and end-users.

Edge computing and cloud computing are not mutually exclusive. Instead, the edge complements and extends the cloud, and combining the two brings several advantages.

Data is increasingly produced at the edge of the network, and it would be more efficient to also process the data at the edge of the network. Hence, edge computing is an important solution to break the bottleneck of emerging technologies based on its advantages of reducing data transmission, improving service latency, and easing cloud computing pressure.
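As a rough, back-of-the-envelope illustration of the data-transmission argument, the sketch below compares the uplink bandwidth needed when cameras stream video to the cloud with the bandwidth needed when an edge device analyzes the video locally and uploads only compact results. All figures (camera count, bitrate, event rate, event size) and function names are assumptions chosen for illustration, not measurements.

```python
def cloud_uplink_bps(num_cameras: int, stream_mbps: float) -> float:
    """Uplink (bits/s) if every camera streams its video to the cloud."""
    return num_cameras * stream_mbps * 1_000_000


def edge_uplink_bps(num_cameras: int, events_per_second: float,
                    bytes_per_event: int) -> float:
    """Uplink (bits/s) if video is analyzed at the edge and only
    small result messages (e.g. detections) are uploaded."""
    return num_cameras * events_per_second * bytes_per_event * 8


# Assumed deployment: 100 cameras, 4 Mbit/s per stream,
# 5 result messages per second per camera, 200 bytes each.
cloud_bps = cloud_uplink_bps(100, 4.0)
edge_bps = edge_uplink_bps(100, 5.0, 200)
reduction_factor = cloud_bps / edge_bps

print(f"cloud streaming: {cloud_bps / 1e6:.1f} Mbit/s")
print(f"edge processing: {edge_bps / 1e6:.1f} Mbit/s")
print(f"reduction: {reduction_factor:.0f}x")
```

Under these assumed numbers, processing at the edge cuts the required uplink by several hundred times. The real saving depends entirely on the bitrate and on how much result data the application needs to upload.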

Edge Intelligence Combines AI and Edge Computing

Data Generated At the Edge Needs AI

The skyrocketing number and variety of mobile and IoT devices generate massive amounts of multi-modal data (audio, pictures, video) as they continuously sense their physical surroundings.

AI is functionally necessary due to its ability to quickly analyze huge data volumes and extract insights from them for high-quality decision-making. Gartner forecasted that in the near future, more than 80% of enterprise IoT projects will include an AI component.

One of the most popular AI techniques, deep learning, brings the ability to identify patterns and detect anomalies in the data sensed by the edge device, for example, population distribution, traffic flow, humidity, temperature, pressure, and air quality.

The insights extracted from the sensed data are then fed to the real-time predictive decision-making applications (e.g., safety and security, automation, traffic control, inspection) in response to the fast-changing environments, increasing operational efficiency.

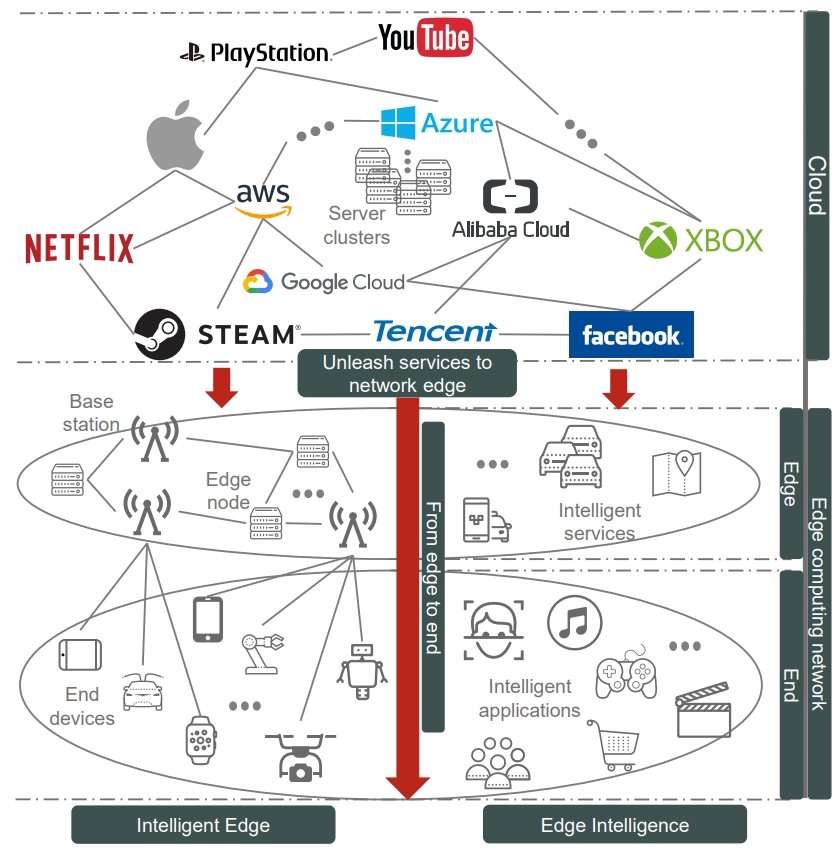

What is Edge Intelligence and Edge ML

The combination of Edge Computing and AI has given rise to a new research area named “Edge Intelligence” or “Edge ML”. Edge Intelligence makes use of the widespread edge resources to power AI applications without relying entirely on the cloud. While the term Edge AI or Edge Intelligence is new, practices in this direction began early: Microsoft built an edge-based prototype to support mobile voice command recognition in 2009.

However, despite this early start, there is still no formal definition of Edge Intelligence. Currently, most organizations and press outlets refer to Edge Intelligence as “the paradigm of running AI algorithms locally on an end device, with data (sensor data or signals) that are created on the device.”

Edge ML and Edge Intelligence are widely recognized areas of research and commercial innovation. Because running AI applications on the edge is both advantageous and necessary, Edge AI has recently received great attention.

The Gartner Hype Cycle names Edge Intelligence as an emerging technology that will reach a plateau of productivity in the next 5 to 10 years. Multiple major enterprises and technology leaders, including Google, Microsoft, IBM, and Intel, have demonstrated the advantages of edge computing in bridging the last mile of AI. These efforts include a wide range of AI applications, such as real-time video analytics, cognitive assistance, precision agriculture, smart city, smart home, and industrial IoT.

Concept of Edge Intelligence and Intelligent Edge

Cloud Is Not Enough to Power Deep Learning Applications

Artificial Intelligence and deep learning-based intelligent services and applications have changed many aspects of people’s lives due to the great advantages of deep learning in the fields of Computer Vision (CV) and Natural Language Processing (NLP).

However, due to efficiency and latency issues, the current cloud computing service architecture is not enough to provide artificial intelligence for every person and every organization at any place.

As a result, for a wider range of application scenarios, such as smart factories and cities, face recognition, and medical imaging, only a limited number of intelligent services are offered.

Since the edge is closer to users than the cloud, edge computing is expected to solve many of these issues.

Advantages of Moving Deep Learning to the Edge

The fusion of AI and edge computing is natural since there is a clear intersection between them. Data generated at the network edge depends on AI to unlock its full potential, and edge computing can prosper with richer data and application scenarios.

Edge intelligence is expected to push deep learning computations from the cloud to the edge as much as possible. The main advantage of deploying deep learning at the edge is that it enables various distributed, low-latency, and reliable intelligent services.

Unleashing deep learning services using resources at the network edge, near the data sources, has emerged as a desirable solution. Therefore, edge intelligence aims to facilitate the deployment of deep learning services using edge computing.

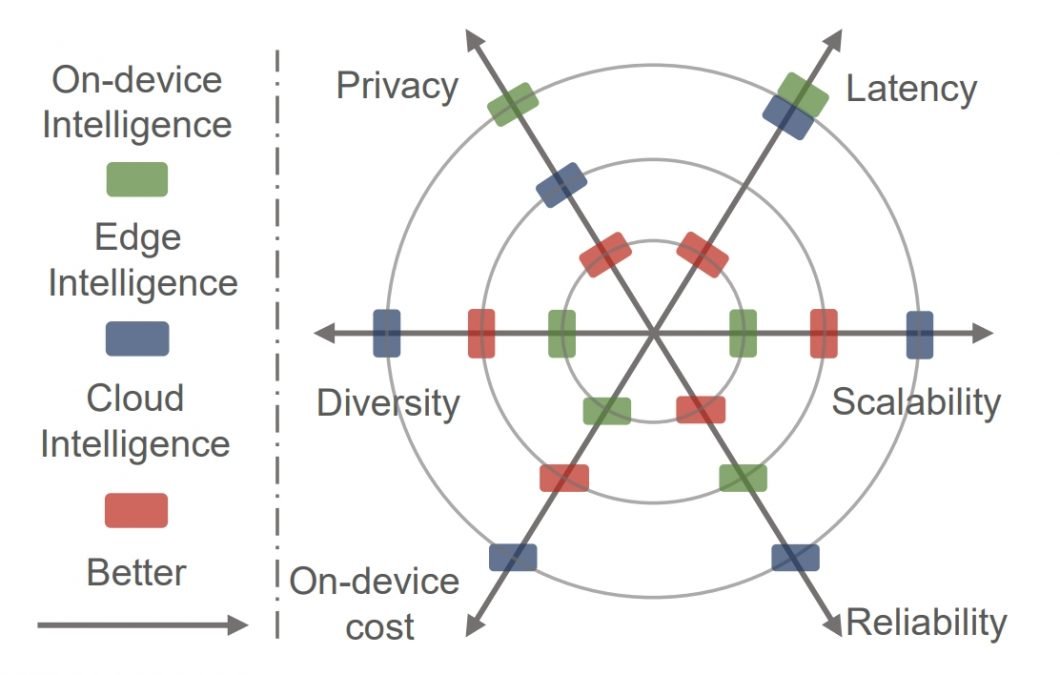

Capabilities comparison of cloud, on-device, and edge intelligence

Edge Computing Is the Key Infrastructure for AI Democratization

AI technologies have witnessed great success in many digital products or services in our daily life (e-commerce, service recommendations, video surveillance, smart home devices, etc.). Also, AI is a key driving force behind emerging innovative frontiers, such as self-driving cars, intelligent finance, cancer diagnosis, smart city, intelligent transportation, and medical discovery.

Based on those examples, leaders in AI push to enable a richer set of deep learning applications and push the boundaries of what is possible. Hence, AI democratization or ubiquitous AI is a goal declared by major IT companies, with the vision of “making AI for every person and every organization at everywhere.”

Therefore, AI should move “closer” to the people, data, and end devices. Because edge infrastructure sits closer to all three, edge computing is better suited than cloud computing to achieving this goal.

For this reason, edge computing is naturally a key enabler for ubiquitous AI.

Multi-Access Edge Computing (MEC)

What is Multi-Access Edge Computing?

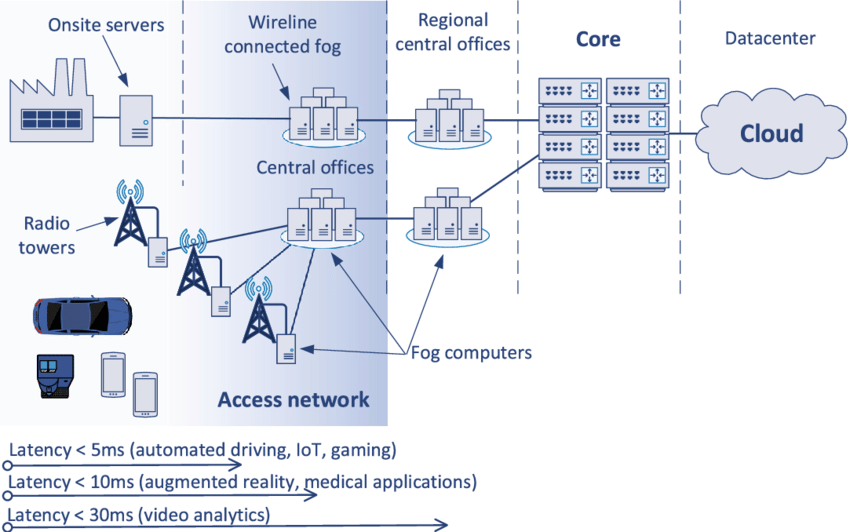

Multi-access Edge Computing (MEC), also known as Mobile Edge Computing, is a key technology that enables mobile network operators to leverage edge-cloud benefits using their 5G networks.

Following the concept of edge computing, MEC is located close to the connected devices and end-users. It enables extremely low latency and high bandwidth while still allowing applications to leverage cloud capabilities as necessary.

MEC to leverage 5G and AI

In recent years, the MEC paradigm has attracted great interest from researchers in both academia and industry. As the world becomes more connected, 5G promises significant advances in computing, storage, and network performance across different use cases. This is how 5G, in combination with AI, has the potential to power large-scale AI applications, for example, in agriculture or logistics.

The new generation of AI applications produces a huge amount of data and requires a variety of services, accelerating the need for extreme network capabilities: high bandwidth, ultra-low latency, and resources for compute-intensive tasks such as computer vision.

Hence, telecommunication providers are increasingly adopting Multi-access Edge Computing (MEC) technology to improve their services and significantly increase cost-efficiency. As a result, telecommunication and IT ecosystems, including infrastructure and service providers, are undergoing a full technological transformation.

How does Multi-Access Edge Computing work?

MEC consists of moving resources from distant, centralized cloud infrastructure to edge infrastructure closer to where the data is produced. Instead of offloading all the data to be computed in the cloud, edge networks act as mini data centers that analyze, process, and store the data.

As a result, MEC reduces latency and facilitates high-bandwidth applications with real-time performance. This makes it possible to implement Edge-to-Cloud systems without the need to install physical edge devices and servers.

The concept of Multi-access Edge Computing

Computer Vision and MEC

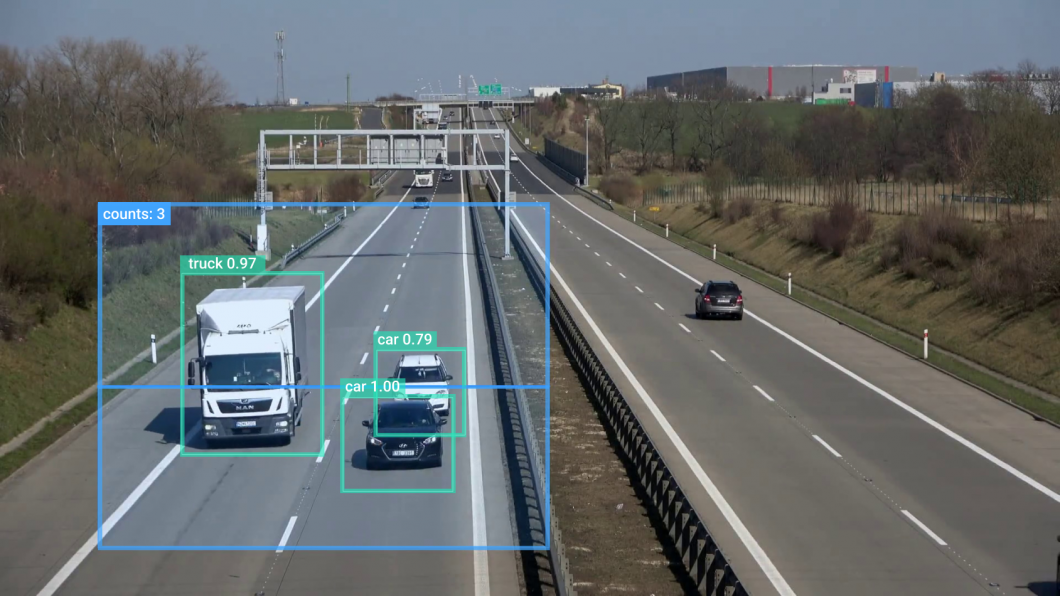

Combining state-of-the-art computer vision algorithms, such as deep learning models, with MEC provides new advantages for large-scale, onsite visual computing applications. In Edge AI use cases, MEC leverages virtualization to replace physical edge devices and servers with virtual devices that process heavy workloads, such as video streams sent through a 5G connection.

At ProX PC, we provide an end-to-end computer vision platform to build, deploy, and operate AI vision applications. ProX PC provides complete edge device management to securely roll out applications with automated deployment capabilities and remote troubleshooting.

The edge-to-cloud architecture of ProX PC supports seamlessly enrolling not only physical but also virtual edge devices. In collaboration with Intel engineers, we’ve integrated the virtualization capabilities to seamlessly enroll virtual edge devices on MEC servers.

As a result, organizations can build and deliver computer vision applications using the low-latency and scalable Multi-access Edge Computing infrastructure. For example, in a smart city, the MEC of a mobile network provider can be used to connect IP cameras throughout the city and run multiple real-time AI video analytics applications.

Computer vision in Smart City for traffic analytics – ProX PC

Deployment of Machine Learning Algorithms at the Network Edge

The unprecedented amount of data, together with the recent breakthroughs in artificial intelligence (AI), enables the use of deep learning technology. Edge Intelligence enables the deployment of machine-learning algorithms at the network edge.

The key motivation for pushing learning towards the edge is to allow rapid access to the enormous real-time data generated by edge devices for fast AI model training and inference, which in turn endows the devices with human-like intelligence to respond to real-time events.

On-device analytics run AI applications on the device to process the gathered data locally. However, many AI applications require computational power that greatly exceeds the capacity of resource- and energy-constrained edge devices. Therefore, limited performance and energy efficiency are common challenges of Edge AI.

Different Levels of Edge Intelligence

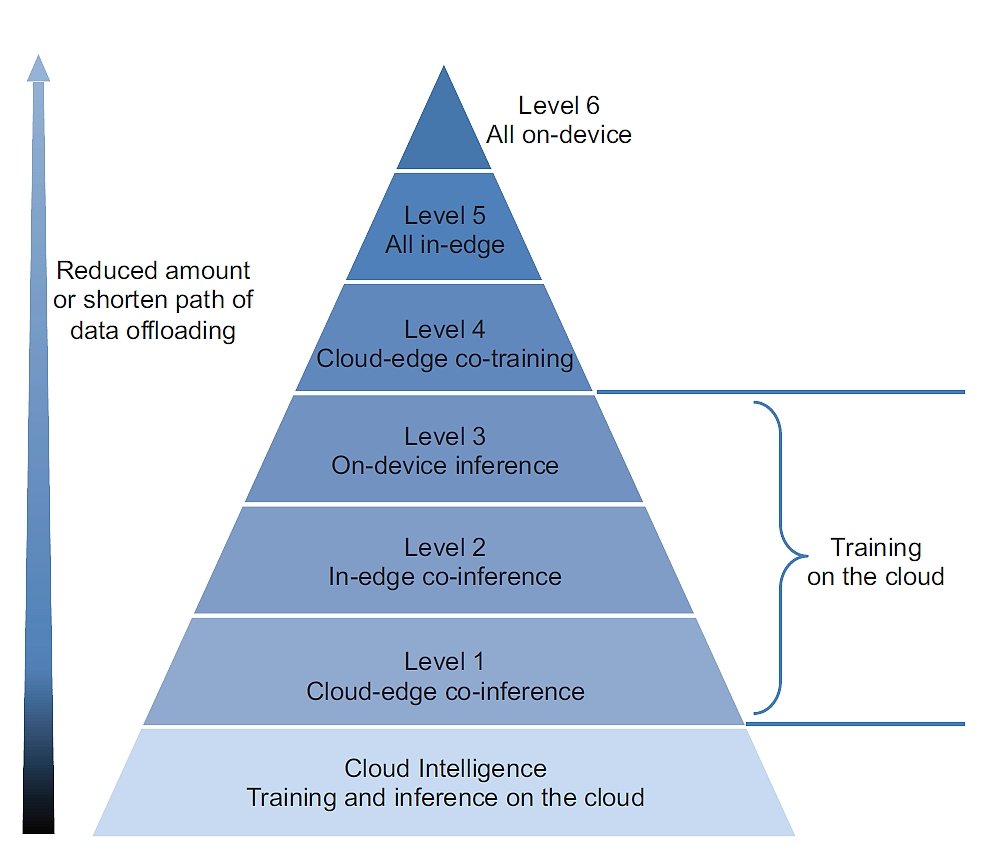

Most concepts of Edge Intelligence generally focus on the inference phase (running the AI model) and assume that the training of the AI model is performed in cloud data centers, mostly due to the high resource consumption of the training phase.

However, Edge Intelligence in its full scope exploits available data and resources across the hierarchy of end devices, edge nodes, and cloud data centers to optimize the overall performance of training and running a Deep Neural Network model.

Therefore, Edge Intelligence does not necessarily require the deep learning model to be trained or run entirely at the edge. Hence, there are cloud-edge scenarios that involve data offloading and co-training.

Edge Intelligence: Scope of Cloud and Edge Computing.

There is no “best level” in general because the optimal setting of Edge Intelligence is application-dependent and is determined by jointly considering multiple criteria such as latency, privacy, energy efficiency, resource cost, and bandwidth cost.

By shifting tasks towards the edge, transmission latency of data offloading decreases, data privacy increases, and cloud resource and bandwidth costs are reduced. However, this is achieved at the cost of increased energy consumption and computational latency at the edge.
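The trade-off between transmission latency and computational latency can be made concrete with a simple additive model: end-to-end latency is the time to transfer the input, plus the network round trip, plus the inference time on the chosen hardware. The sketch below is only an illustration under assumed figures. The `Placement` class, the function name, and every number in it are hypothetical, not benchmarks of any real device or network.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Placement:
    """Where inference runs, with illustrative (assumed) performance figures."""
    name: str
    compute_ms: float             # inference time on that hardware
    network_rtt_ms: float         # round trip to reach it (0 if on-device)
    uplink_mbps: Optional[float]  # None means no data leaves the device


def end_to_end_latency_ms(p: Placement, input_kilobytes: float) -> float:
    """Transfer time + network round trip + compute time, in milliseconds."""
    if p.uplink_mbps is None:
        transfer_ms = 0.0
    else:
        # kilobits divided by megabits-per-second yields milliseconds
        transfer_ms = input_kilobytes * 8 / p.uplink_mbps
    return transfer_ms + p.network_rtt_ms + p.compute_ms


PLACEMENTS = [
    Placement("on-device", compute_ms=120.0, network_rtt_ms=0.0, uplink_mbps=None),
    Placement("edge server", compute_ms=30.0, network_rtt_ms=5.0, uplink_mbps=100.0),
    Placement("cloud", compute_ms=15.0, network_rtt_ms=60.0, uplink_mbps=10.0),
]

# Assumed input: one 200 kB camera frame.
latencies = {p.name: end_to_end_latency_ms(p, input_kilobytes=200.0)
             for p in PLACEMENTS}
fastest = min(latencies, key=latencies.get)

for name, ms in latencies.items():
    print(f"{name}: {ms:.0f} ms")
print("fastest:", fastest)
```

With these assumed numbers, the nearby edge server gives the lowest latency: it computes more slowly than the cloud but avoids most of the transfer time. Changing the input size, link speed, or device performance can change the winner, which is why the best placement depends on the application.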

On-device inference is currently a promising approach for various AI applications and has proven to be well balanced for many use cases. On-device model training is the foundation of Federated Learning.
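In Federated Learning, devices train on their local data and share only model updates, which a server then combines. A common aggregation rule is federated averaging, where each device's weights are averaged in proportion to the number of samples it trained on. The following is a minimal sketch of that aggregation step only, using plain Python lists. The function name and the example values are assumptions for illustration, and local training, communication, and security are omitted.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Average client weight vectors, weighted by each client's sample count.

    Each update is (weights, num_samples) reported by one device.
    """
    total_samples = sum(n for _, n in updates)
    if total_samples == 0:
        raise ValueError("no training samples reported")

    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all clients must report weights of equal length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


# Two hypothetical devices: the second trained on three times as much data,
# so its weights count three times as much in the result.
client_updates = [
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
]
global_weights = federated_average(client_updates)
print(global_weights)
```

The raw data never leaves the devices in this scheme. Only the weight vectors and sample counts are sent, which is what ties on-device training to the privacy benefits of shifting tasks towards the edge.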

Deep Learning On-Device Inference at the Edge

AI models, more specifically Deep Neural Networks (DNNs), require larger-scale datasets to further improve their accuracy. This means that computation costs increase dramatically, as the outstanding performance of deep learning models requires high-end hardware. As a result, it is difficult to deploy them to the edge, which comes with resource constraints.

Therefore, large-scale deep learning models are generally deployed in the cloud, while end devices just send input data to the cloud and then wait for the inference results. However, cloud-only inference limits the ubiquitous use of deep learning services, for example through the latency and bandwidth cost of sending every input to the cloud.

To address those challenges, deep learning services tend to resort to edge computing. Therefore, deep learning models have to be customized to fit the resource-constrained edge. Meanwhile, deep learning applications need to be carefully optimized to balance the trade-off between inference accuracy and execution latency.
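One simple way to picture the accuracy-versus-latency trade-off is to prepare several variants of a model (for example, smaller or compressed versions) and pick the most accurate one that still meets the application's latency budget on the target device. The sketch below assumes such variants already exist. The variant names, accuracy values, and latency values are hypothetical placeholders, not results from any real model.

```python
from typing import NamedTuple, Optional, Sequence


class ModelVariant(NamedTuple):
    name: str
    accuracy: float    # validation accuracy in [0, 1] (assumed figure)
    latency_ms: float  # inference time on the target edge device (assumed figure)


def pick_variant(variants: Sequence[ModelVariant],
                 latency_budget_ms: float) -> Optional[ModelVariant]:
    """Return the most accurate variant within the latency budget, or None."""
    feasible = [v for v in variants if v.latency_ms <= latency_budget_ms]
    return max(feasible, key=lambda v: v.accuracy, default=None)


VARIANTS = [
    ModelVariant("large", accuracy=0.92, latency_ms=180.0),
    ModelVariant("medium", accuracy=0.89, latency_ms=45.0),
    ModelVariant("small-quantized", accuracy=0.84, latency_ms=12.0),
]

choice = pick_variant(VARIANTS, latency_budget_ms=50.0)
print(choice.name if choice else "no variant fits the budget")
```

A looser budget would select the larger, more accurate variant, while a budget that none of the variants can meet returns `None`, which signals that the model needs further optimization or that part of the workload has to be offloaded.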

What’s Next for Edge Intelligence and Edge Computing?

With the emergence of both AI and IoT comes the need to push the AI frontier from the cloud to the edge device. Edge computing is a widely recognized solution for supporting computation-intensive AI and computer vision applications in resource-constrained environments.

Intelligent Edge, also called Edge AI, is a novel paradigm of bringing edge computing and AI together with the goal of powering ubiquitous AI applications for organizations across industries.

For more info visit www.proxpc.com
